I. Intro

Date: May – June 2024

The code is on GitHub, click here.

It took me several days to install Ubuntu on a Raspberry Pi 4B. The main issue was that nothing showed up on the monitor, so I couldn’t tell whether the cause was the cable, the SD card, or the image. I tried formatting different SD cards with different methods, and flashing images from different sources onto different SD cards with different flashing software. After trying two TVs and two PC monitors, the last one finally worked. The following config.txt is what I worked out over three days. 1080p doesn’t work with my monitor (it flickers constantly), so I had to use 720p.

    [all]
    kernel=vmlinuz
    cmdline=cmdline.txt
    initramfs initrd.img followkernel

    [pi4]
    max_framebuffers=2
    arm_boost=1

    [all]
    # Enable the audio output, I2C and SPI interfaces on the GPIO header. As these
    # parameters related to the base device-tree they must appear *before* any
    # other dtoverlay= specification
    dtparam=audio=on
    dtparam=i2c_arm=on
    dtparam=spi=on

    # Comment out the following line if the edges of the desktop appear outside
    # the edges of your display
    #disable_overscan=1
    #overscan_left=20
    #overscan_right=20
    #overscan_top=20
    #overscan_bottom=20

    # If you have issues with audio, you may try uncommenting the following line
    # which forces the HDMI output into HDMI mode instead of DVI (which doesn't
    # support audio output)
    # hdmi_safe=1
    hdmi_drive=2
    hdmi_force_hotplug=1
    hdmi_ignore_edid=0xa5000080
    hdmi_group=2
    hdmi_mode=85
    config_hdmi_boost=11

    [cm4]
    # Enable the USB2 outputs on the IO board (assuming your CM4 is plugged into
    # such a board)
    #dtoverlay=dwc2,dr_mode=host

    [all]
    # Enable the KMS ("full" KMS) graphics overlay, leaving GPU memory as the
    # default (the kernel is in control of graphics memory with full KMS)
    # dtoverlay=vc4-kms-v3d

    # Autoload overlays for any recognized cameras or displays that are attached
    # to the CSI/DSI ports. Please note this is for libcamera support, *not* for
    # the legacy camera stack
    camera_auto_detect=1
    display_auto_detect=1

    # Config settings specific to arm64
    arm_64bit=1
    dtoverlay=dwc2

I don’t remember which flashing software and image I used for Ubuntu 23, because the last ROS 1 release (Noetic) only works on Ubuntu 20 and I had to reinstall with Ubuntu 20.04 Server! Then I somehow installed a desktop on top of the server version (following this link).

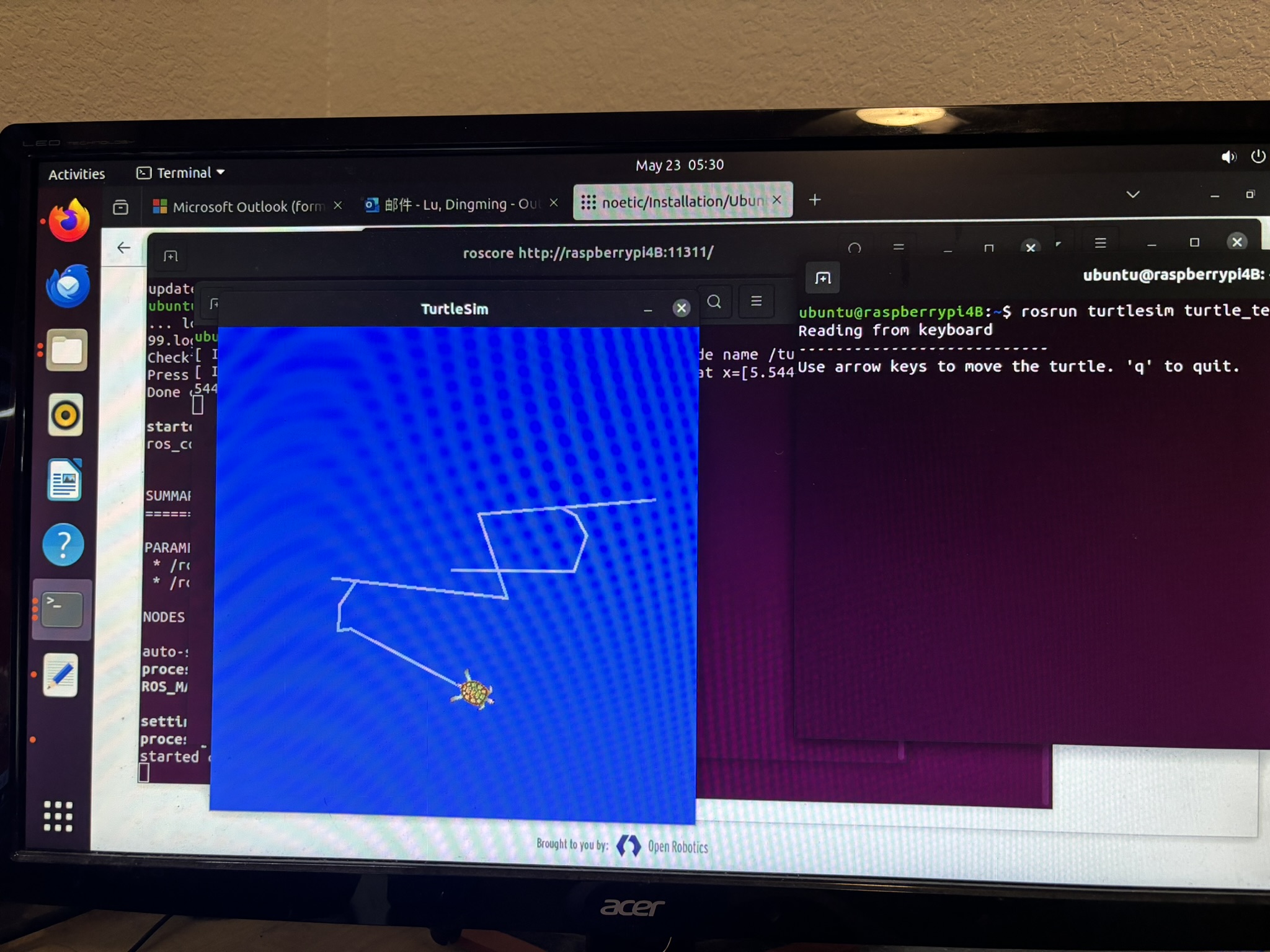

Then it took me another 16 days to learn ROS, from topics to SLAM.

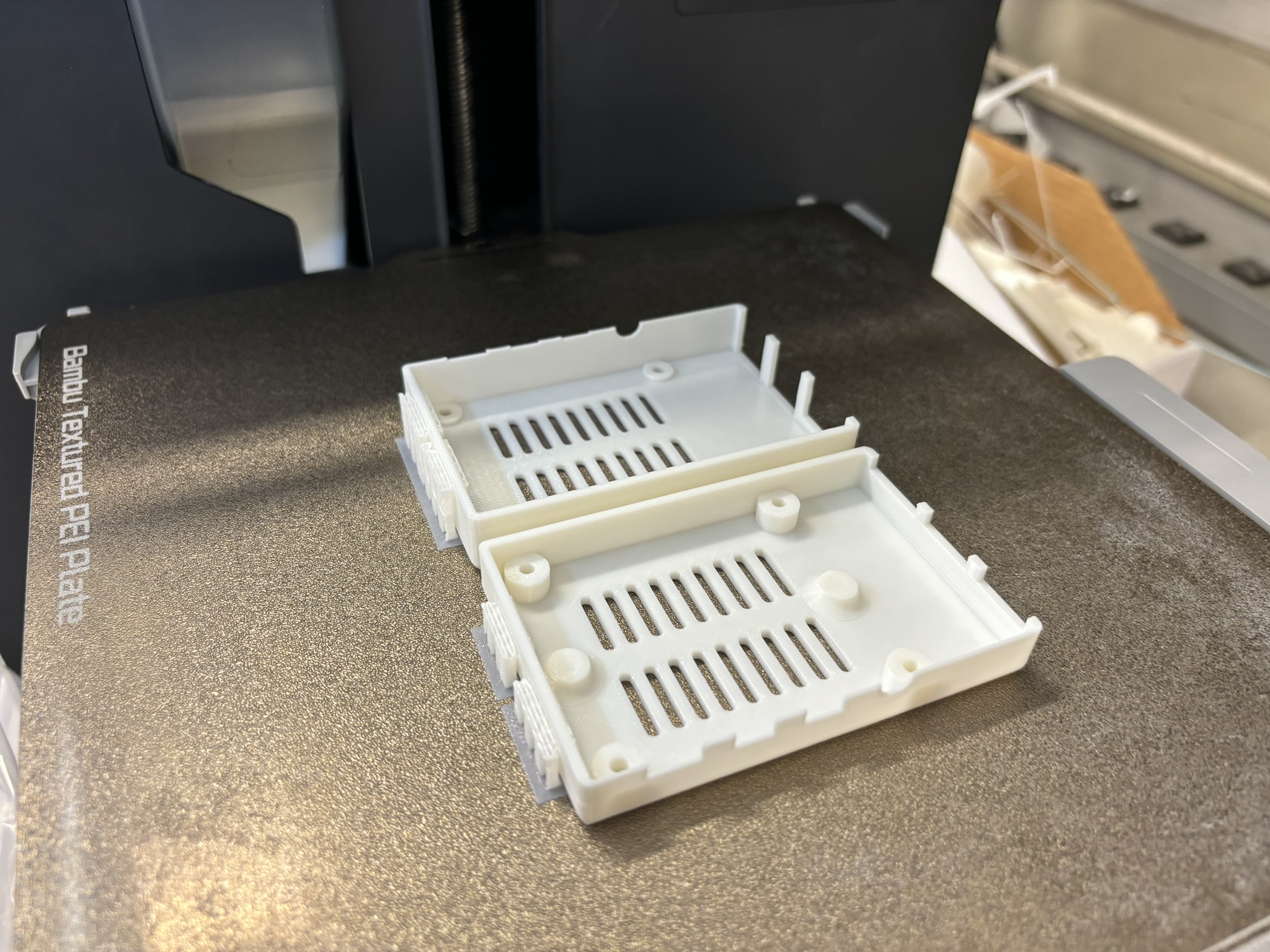

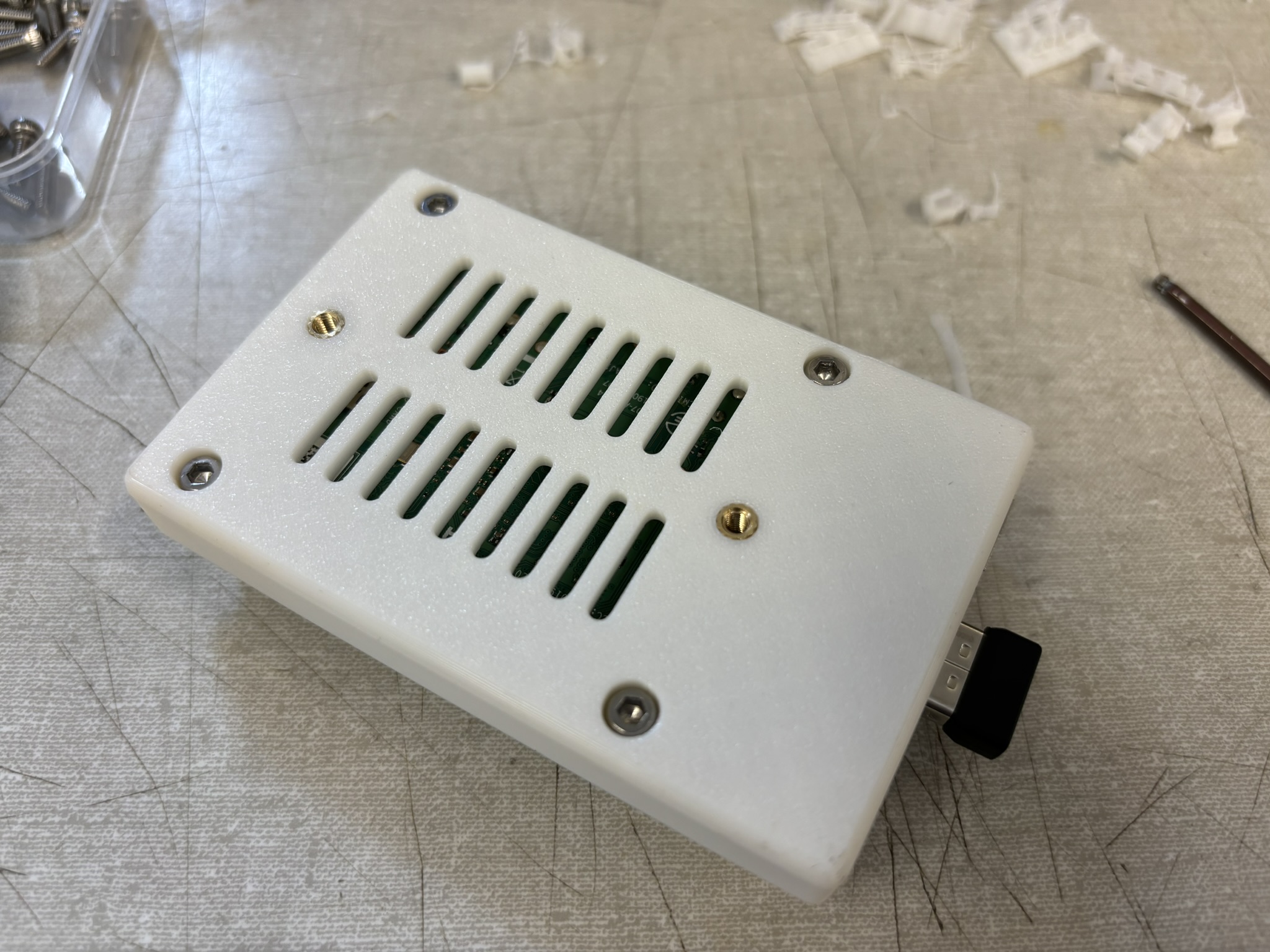

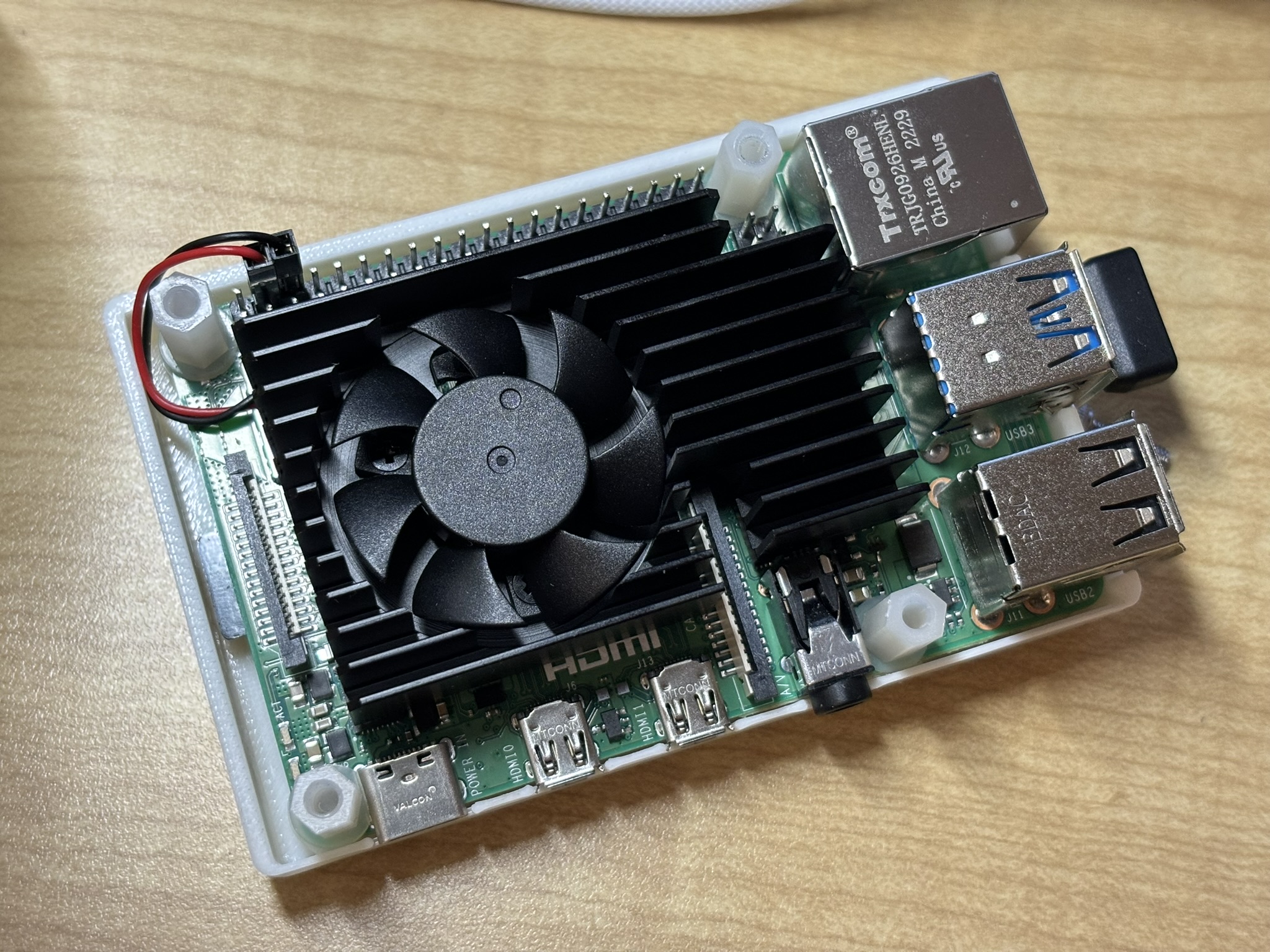

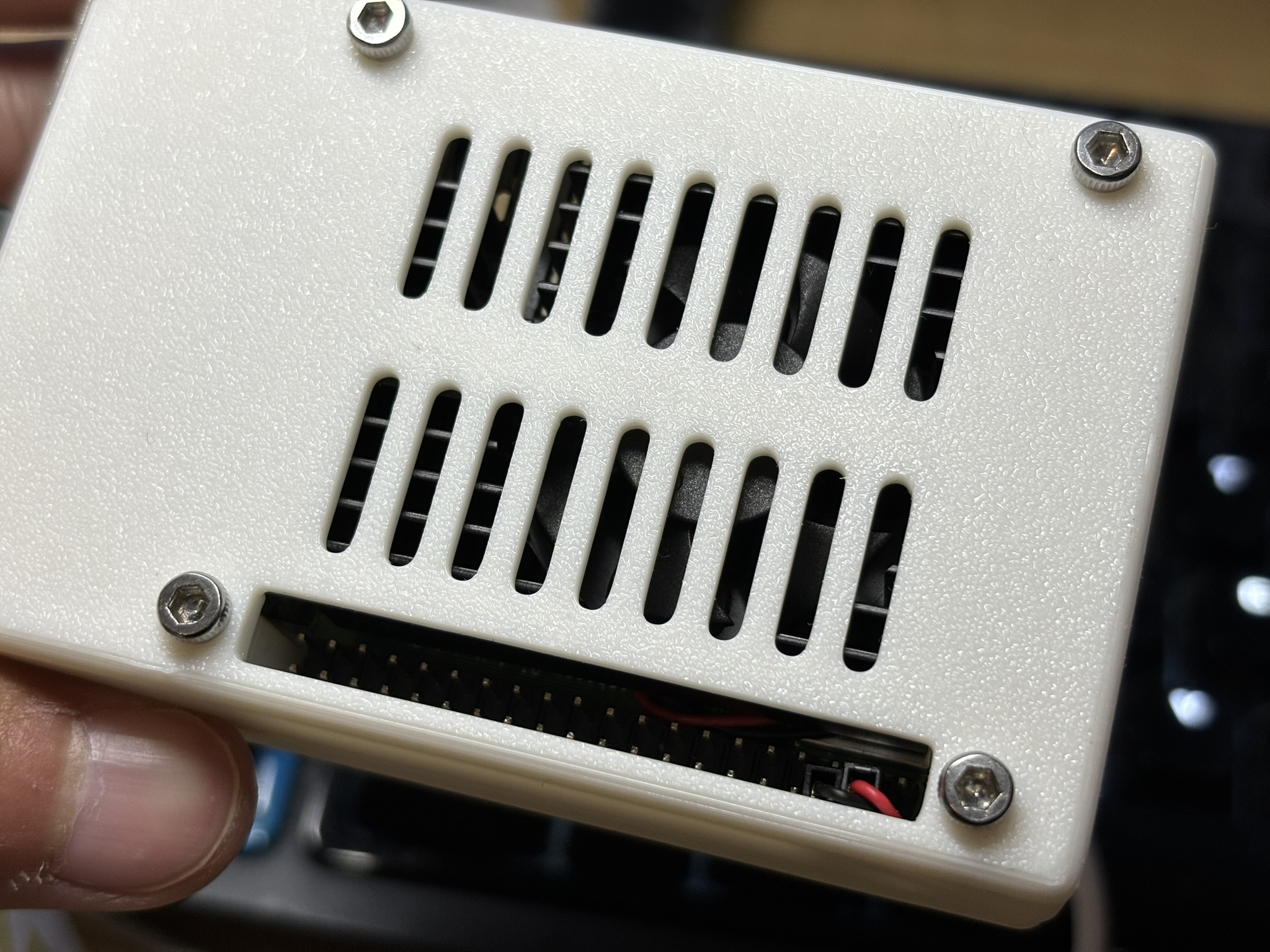

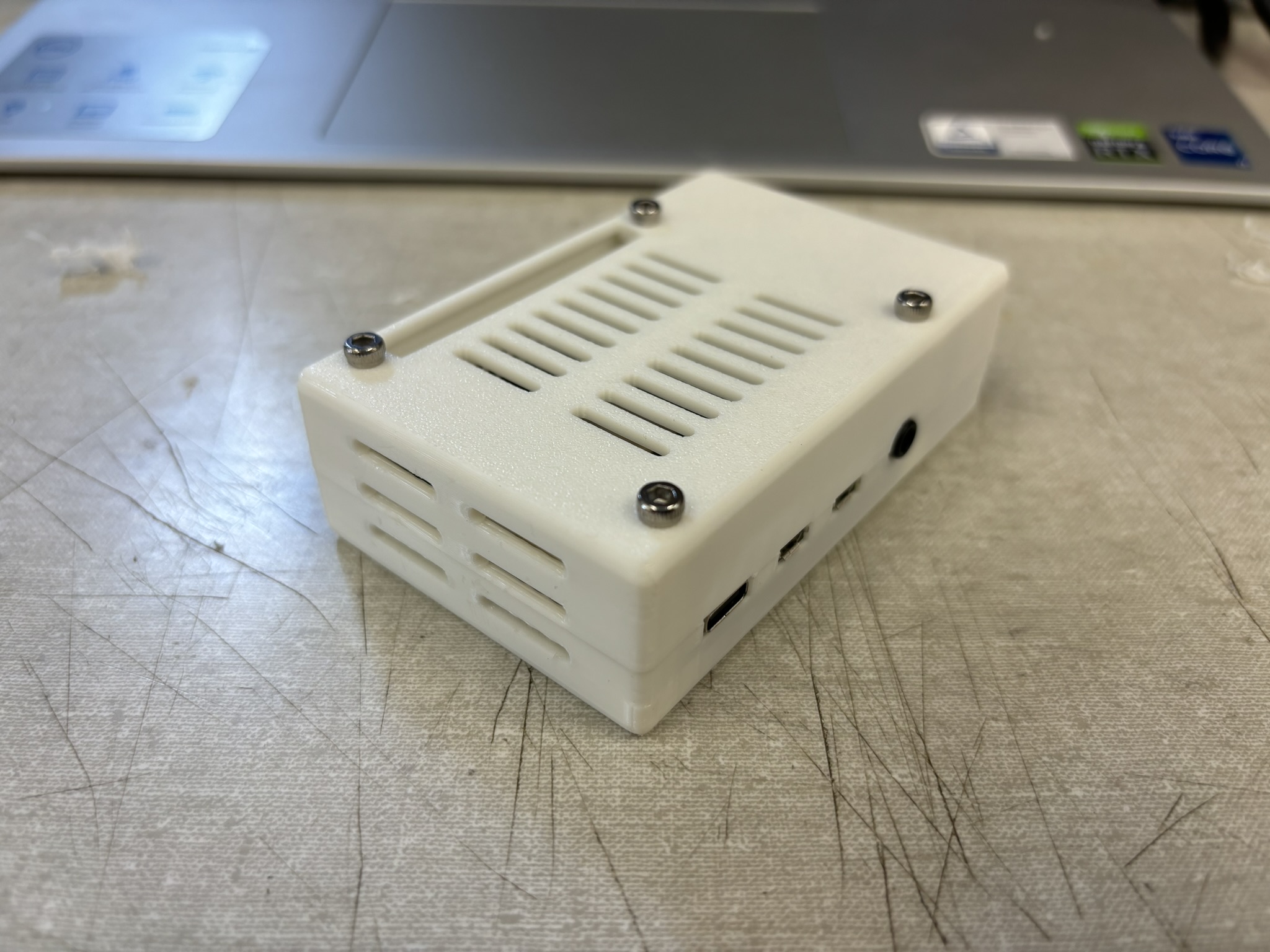

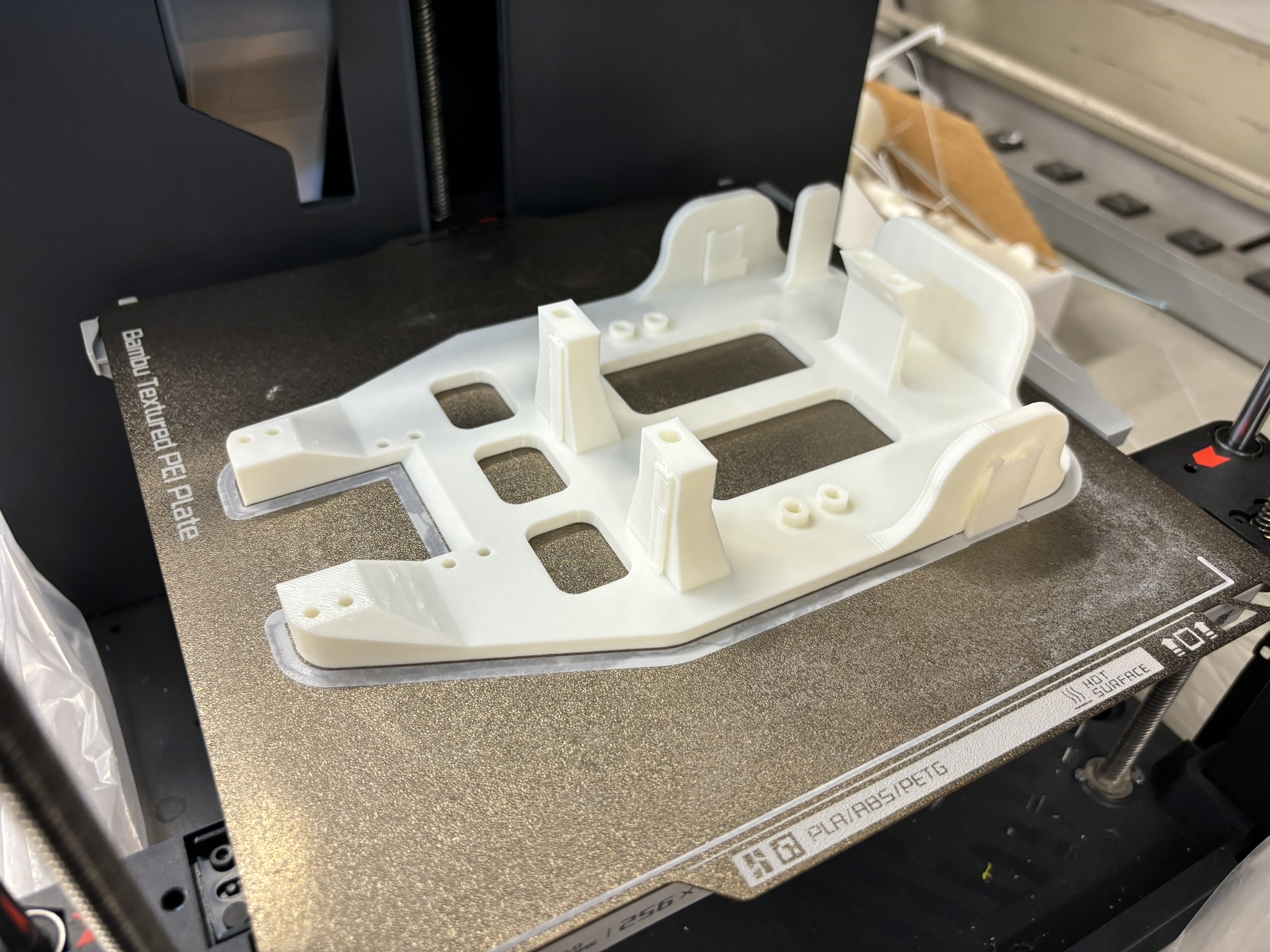

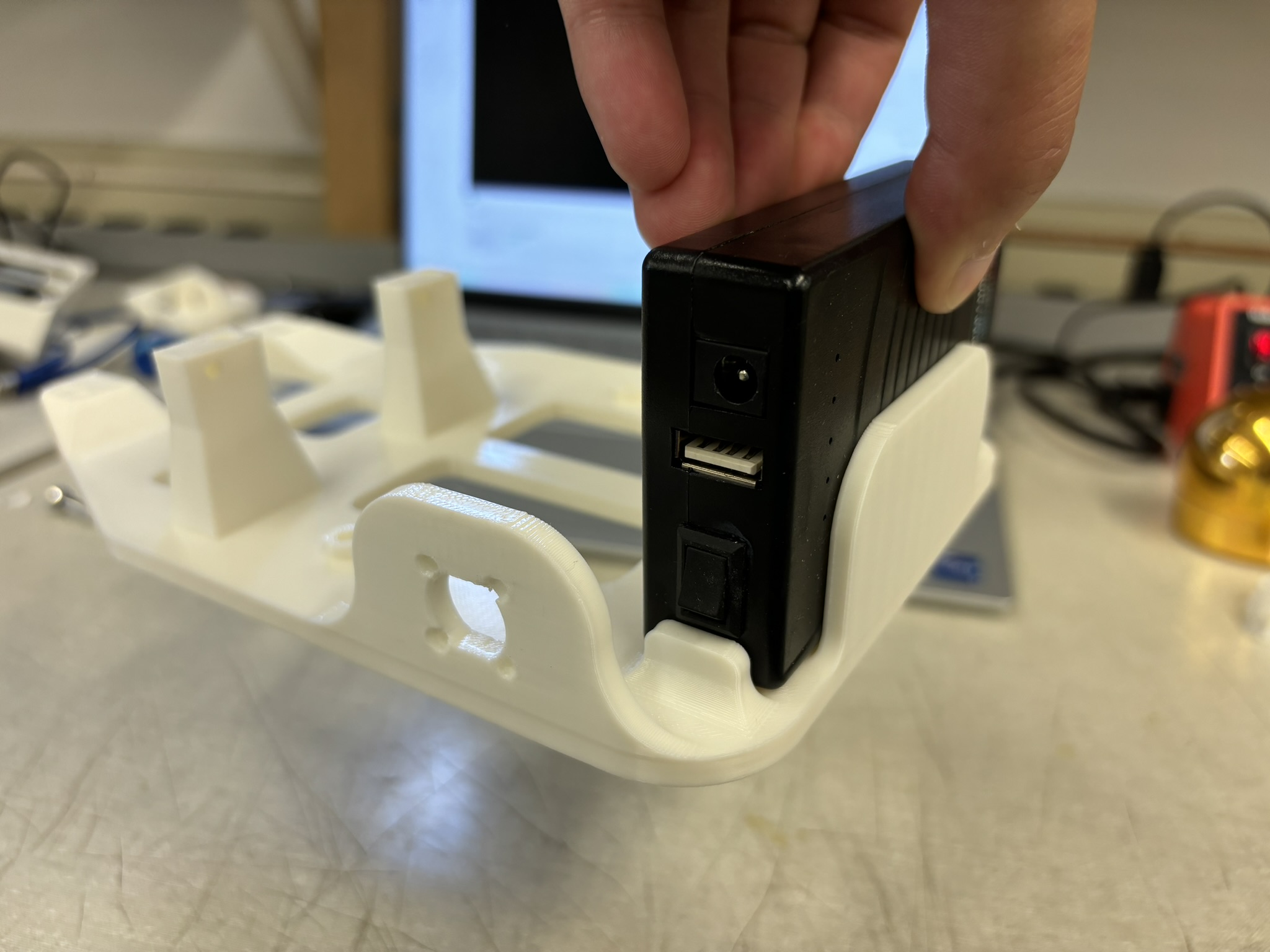

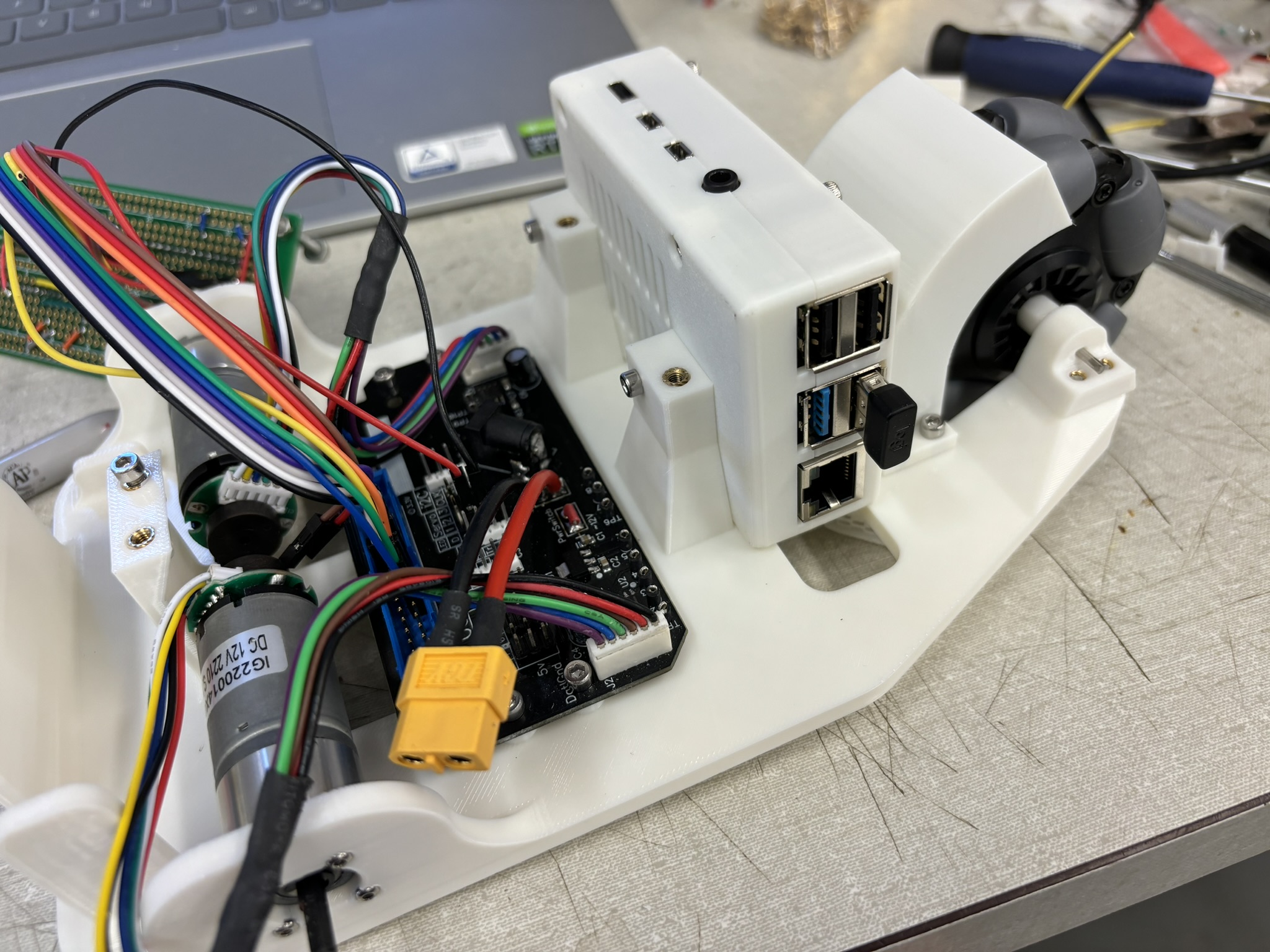

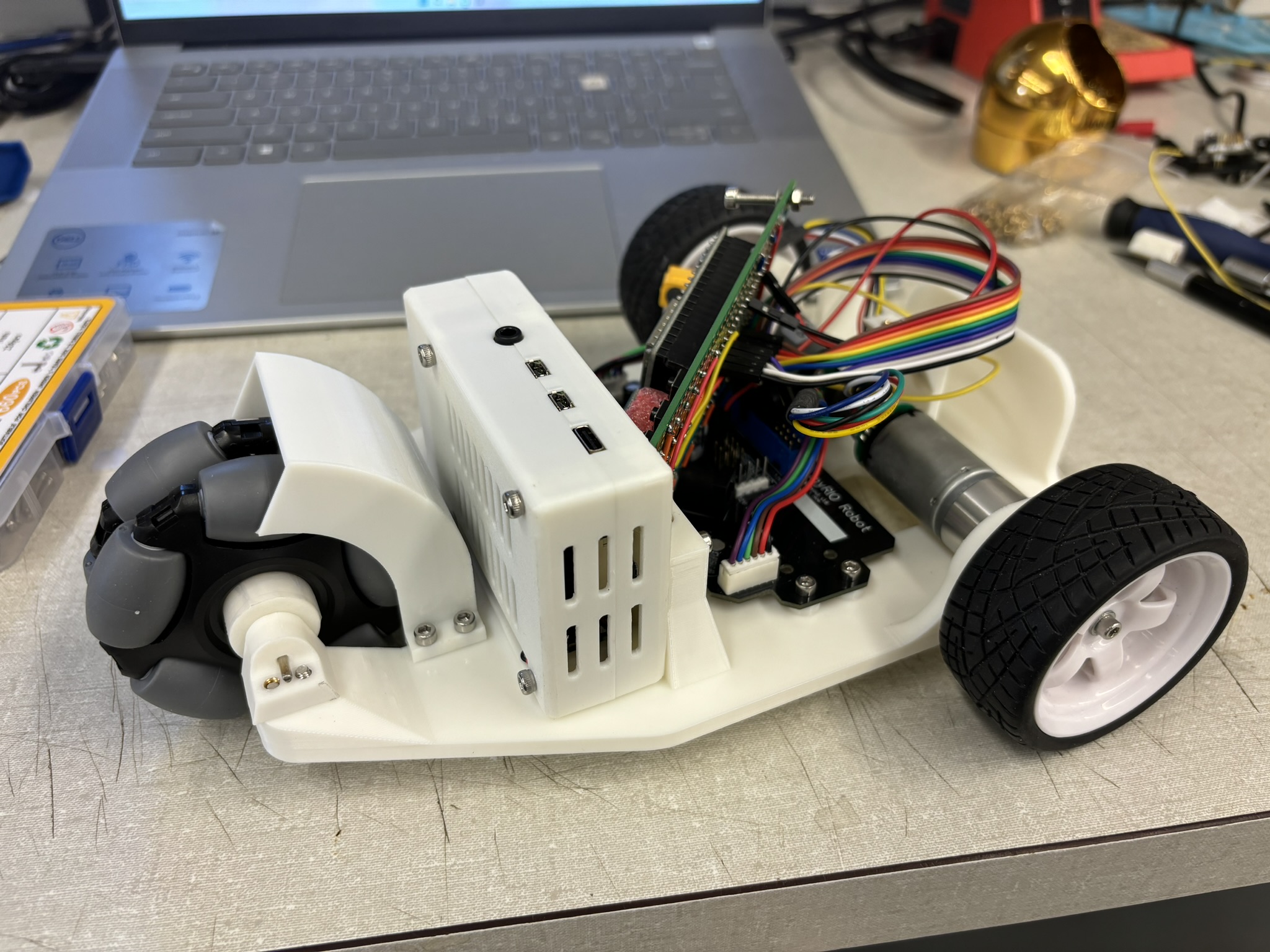

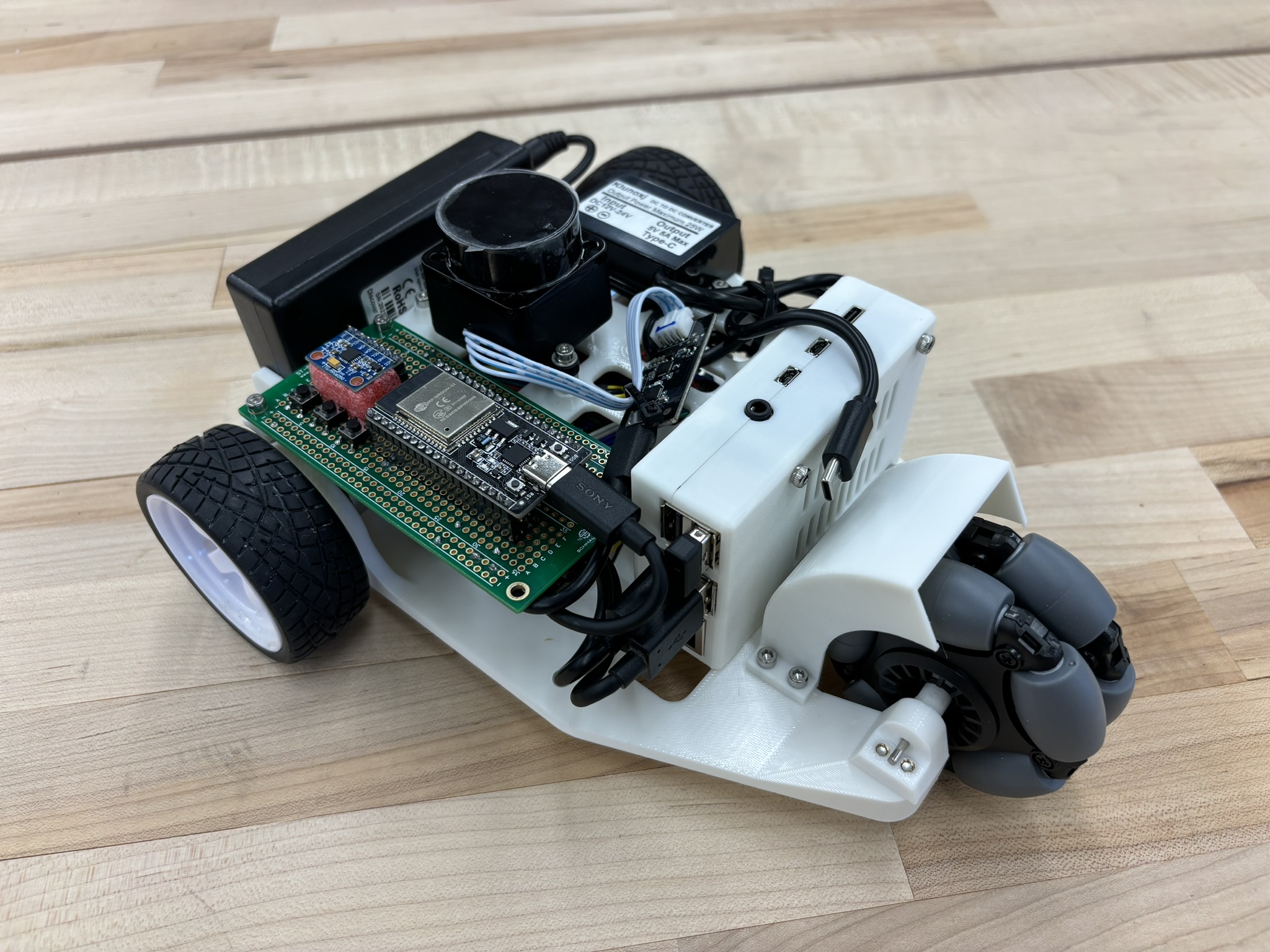

II. Build a Case for PI4B

III. ROS Learning

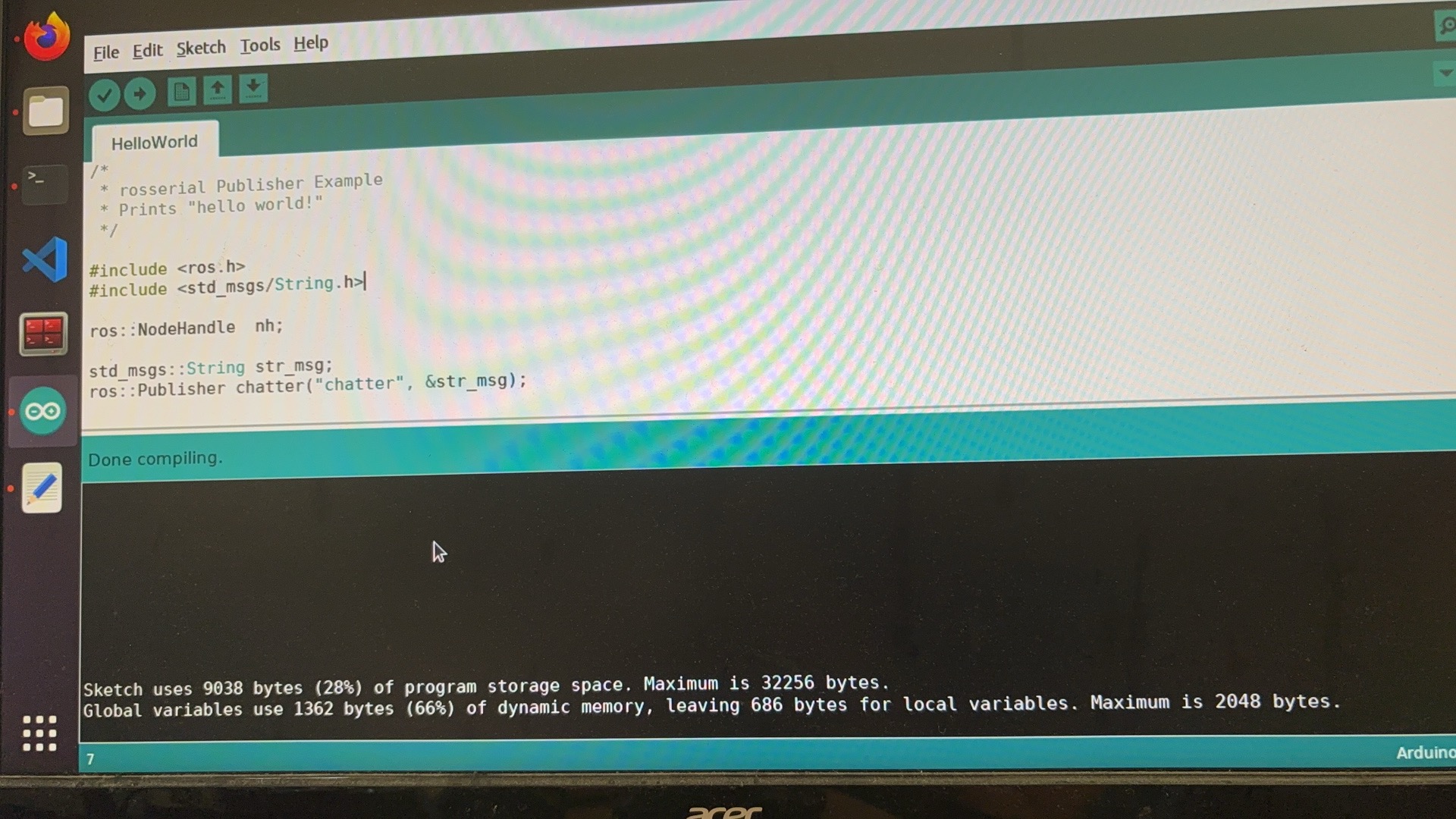

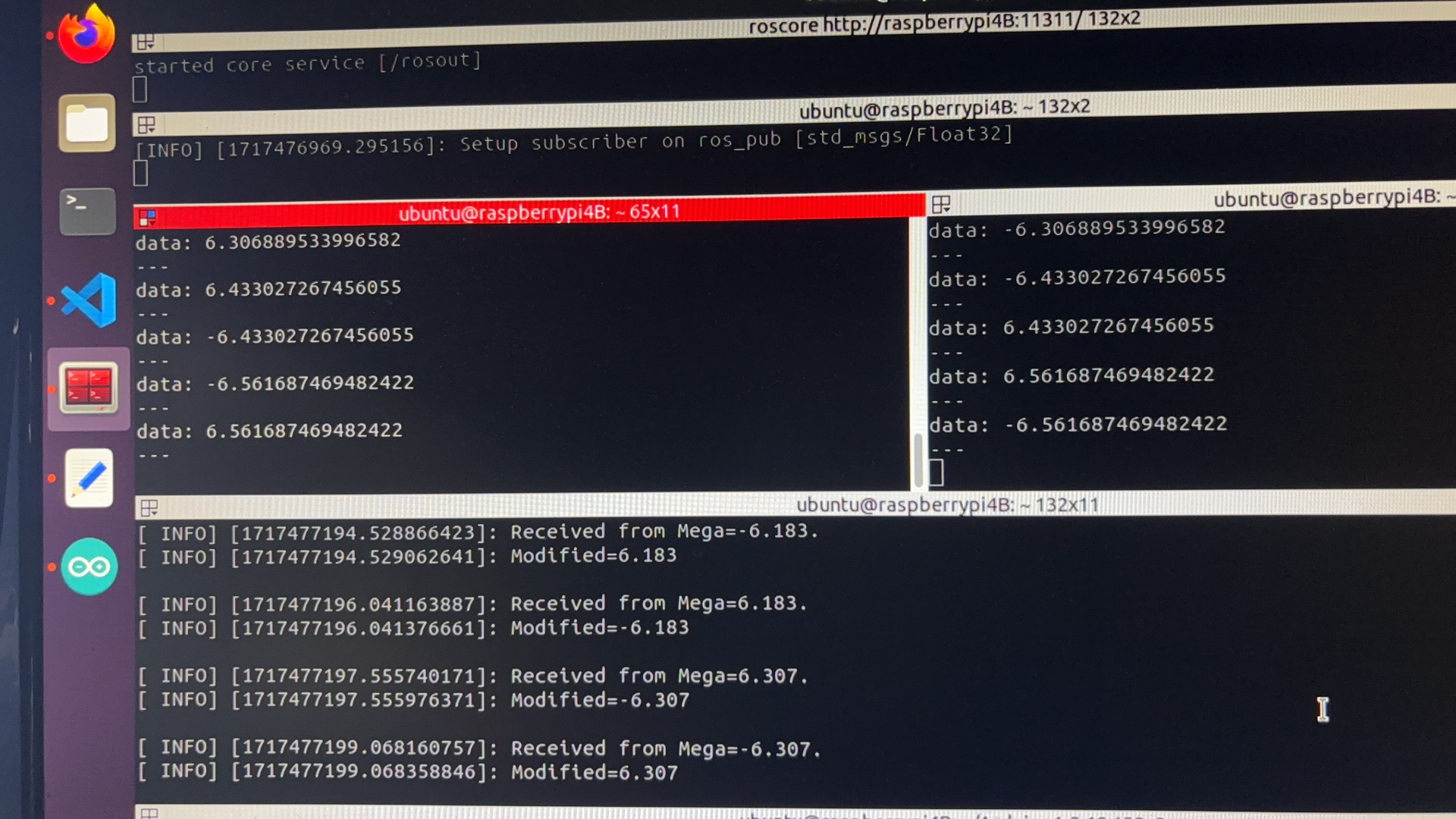

It took me about three days to figure out how to communicate between the Arduino and ROS. All materials are on GitHub, and my notes cover the problems I ran into and their solutions.

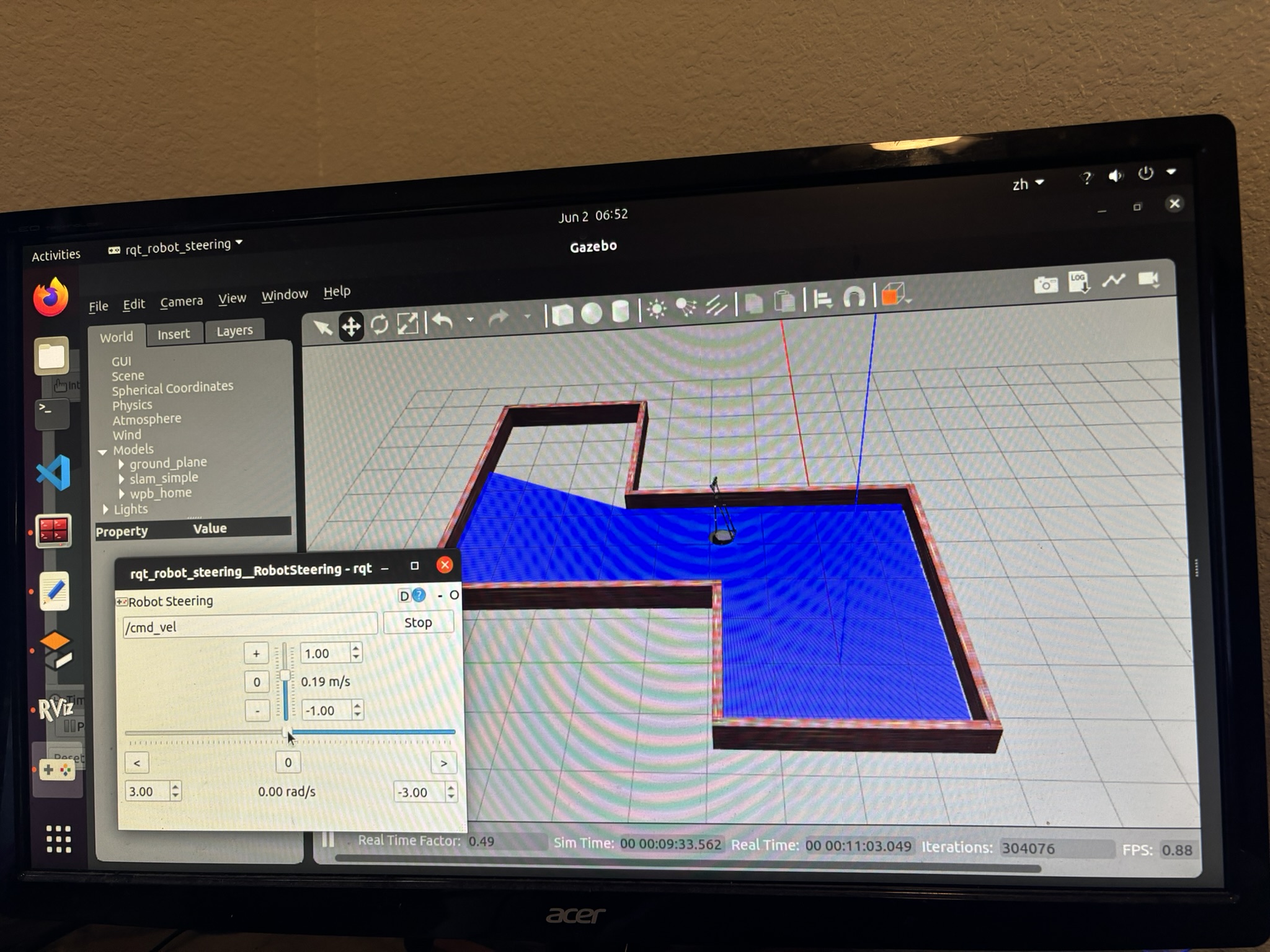

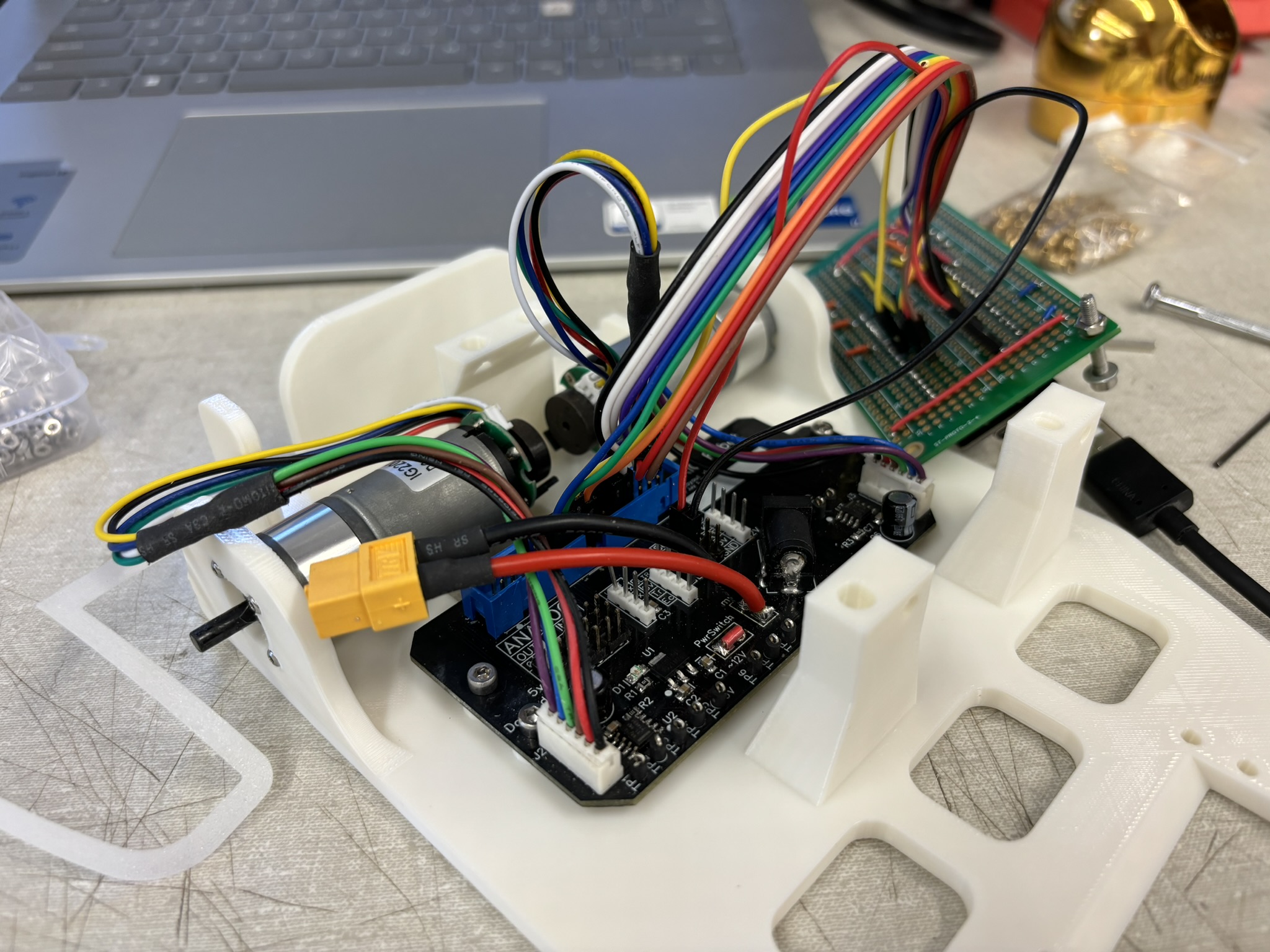

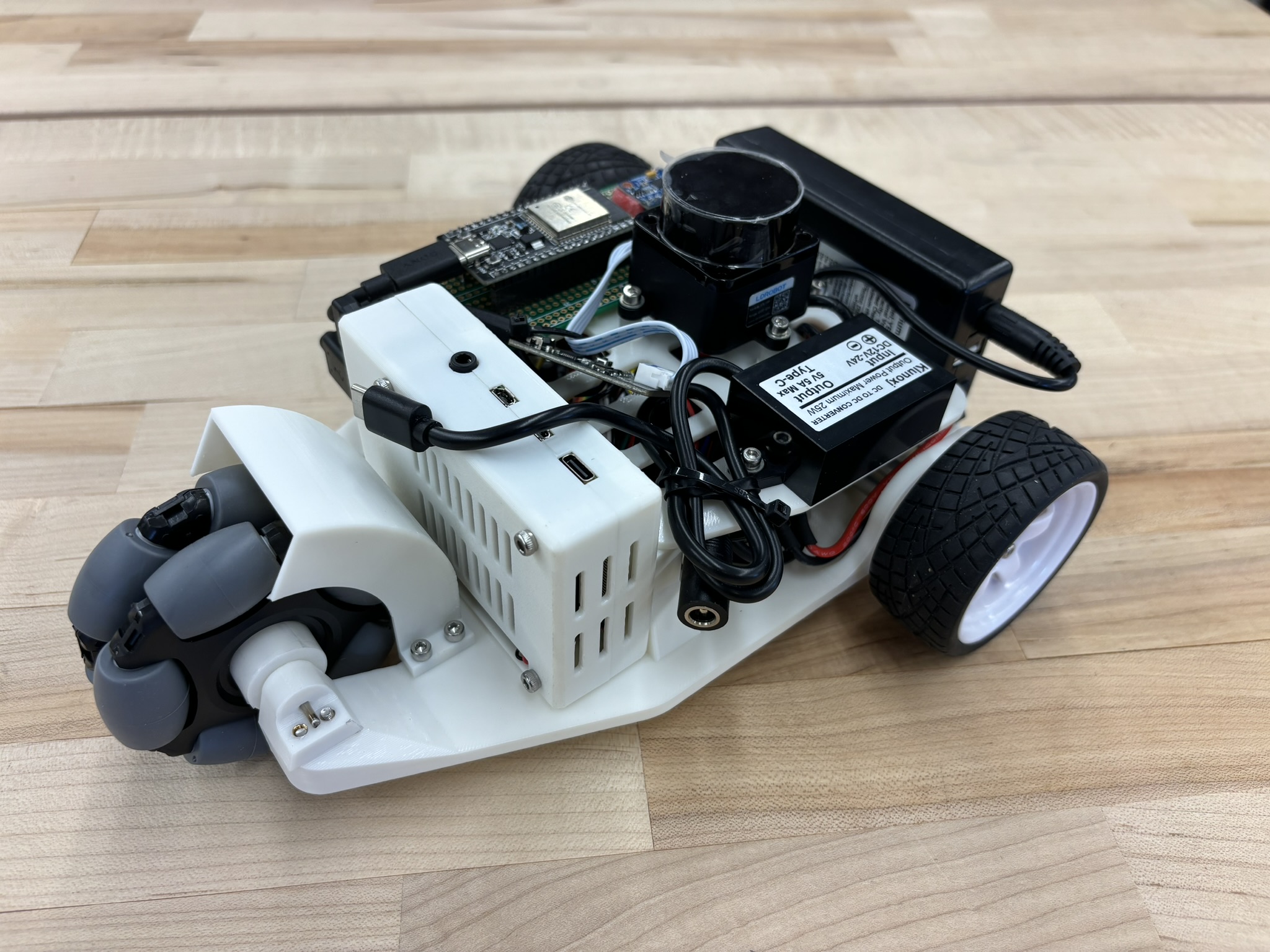

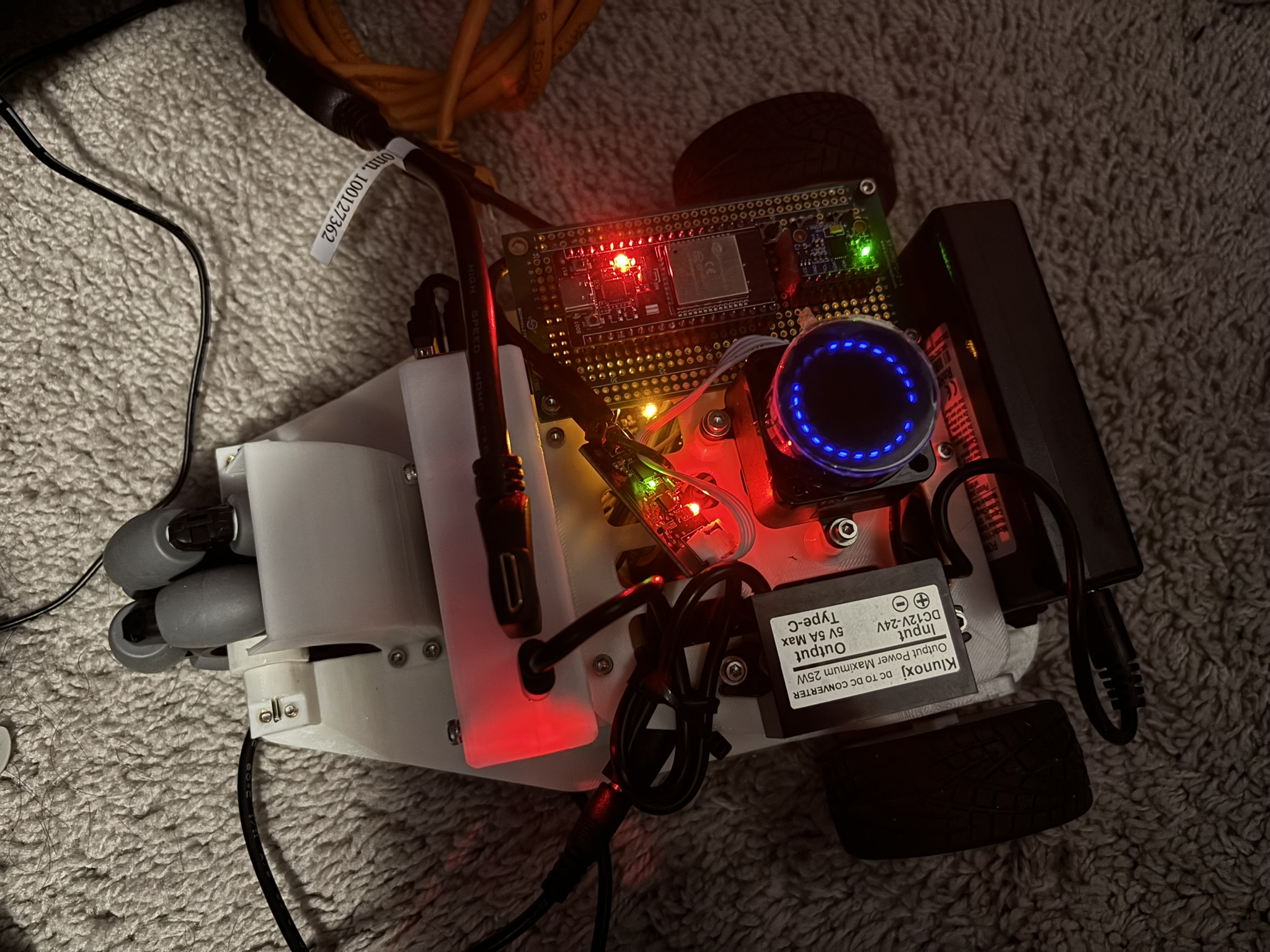

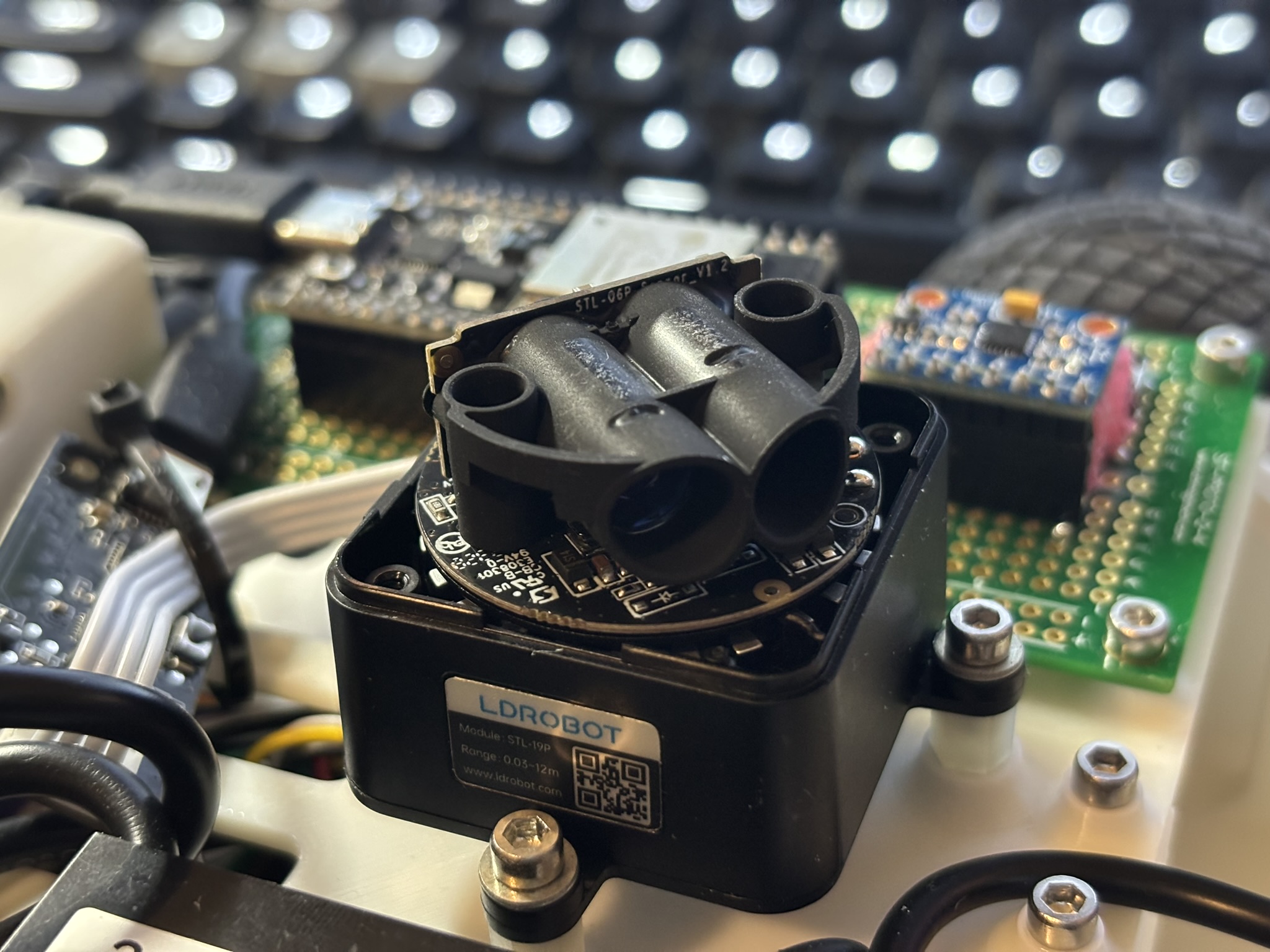

IV. Building the ROS Bot

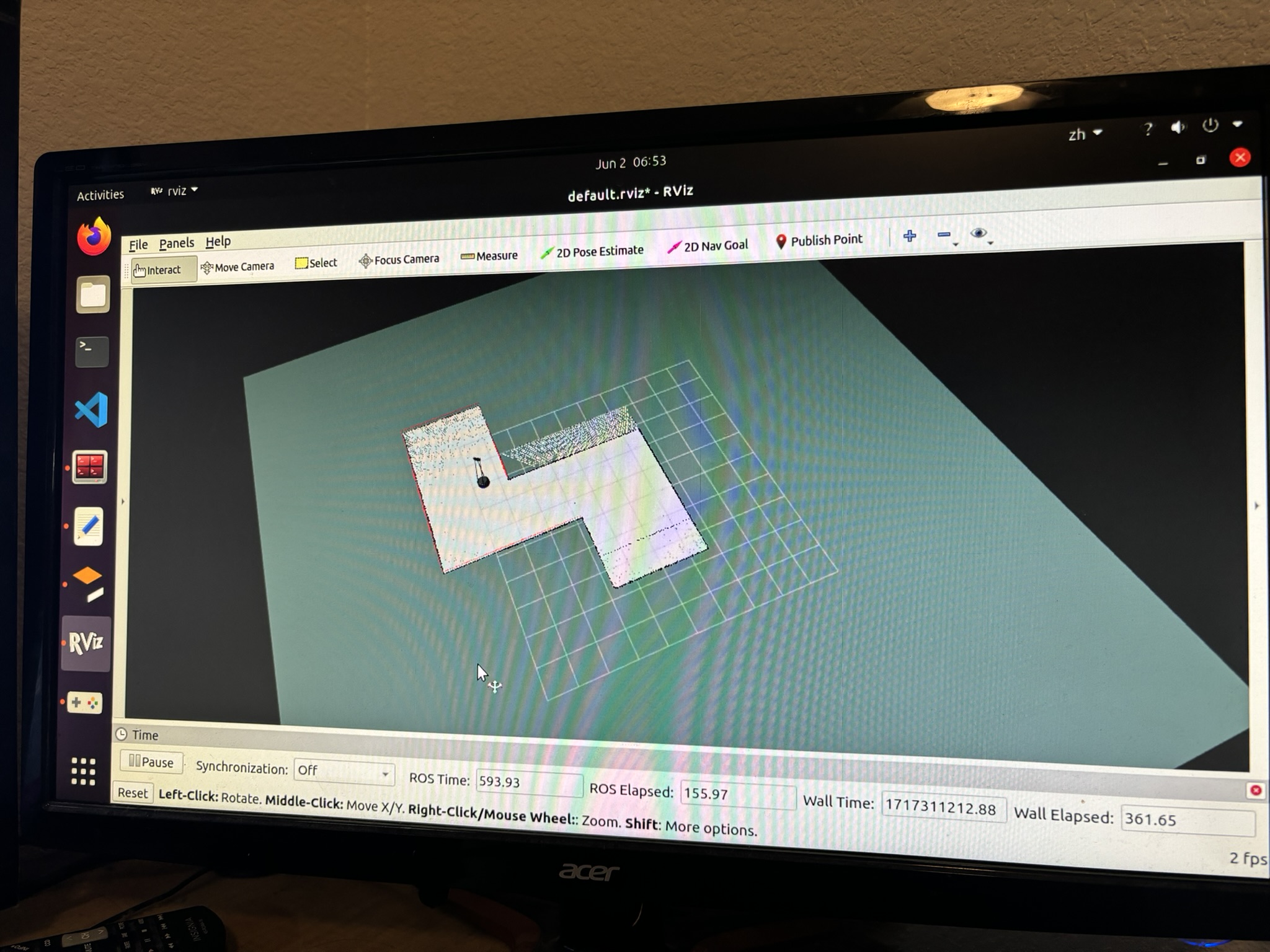

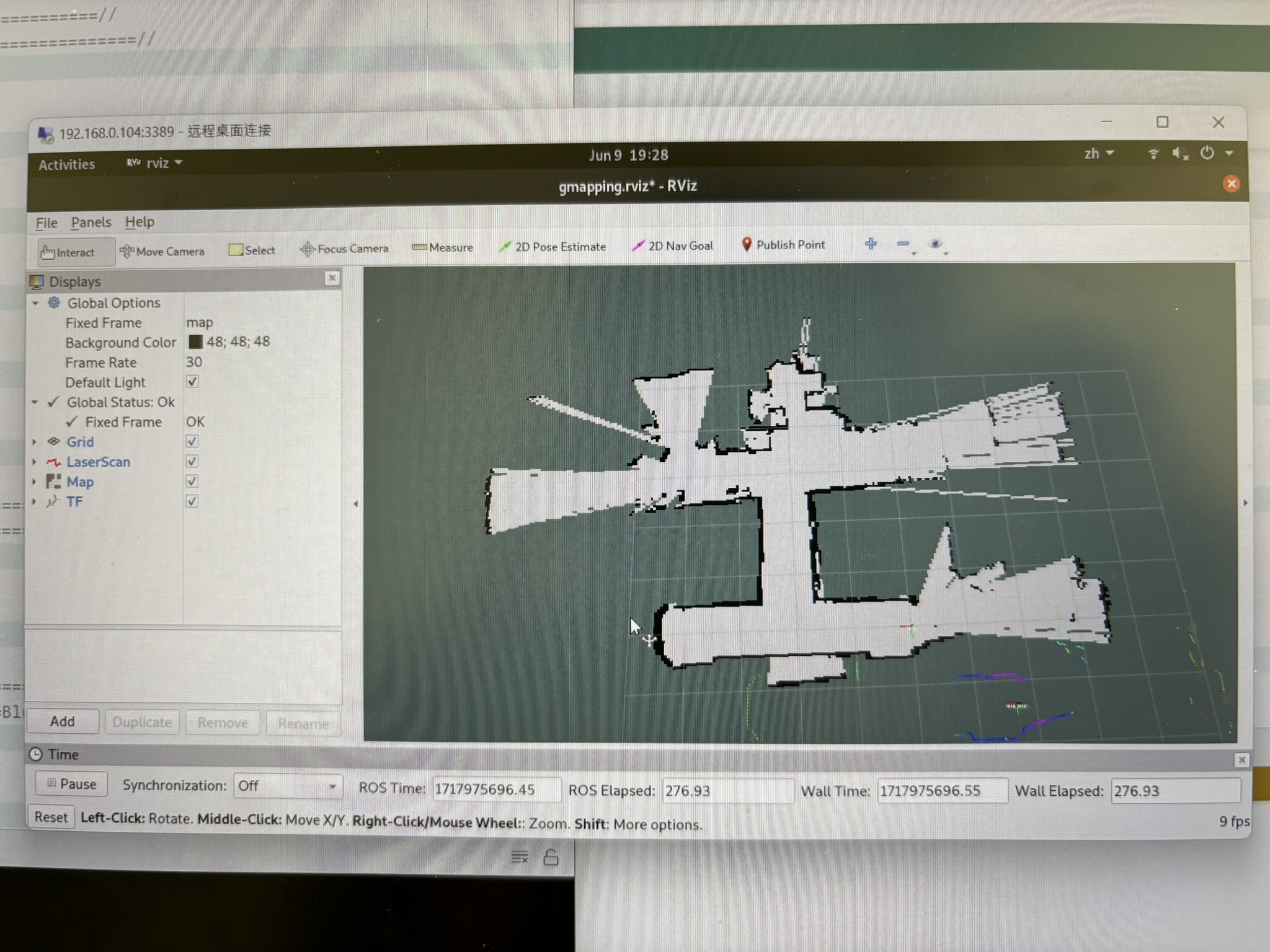

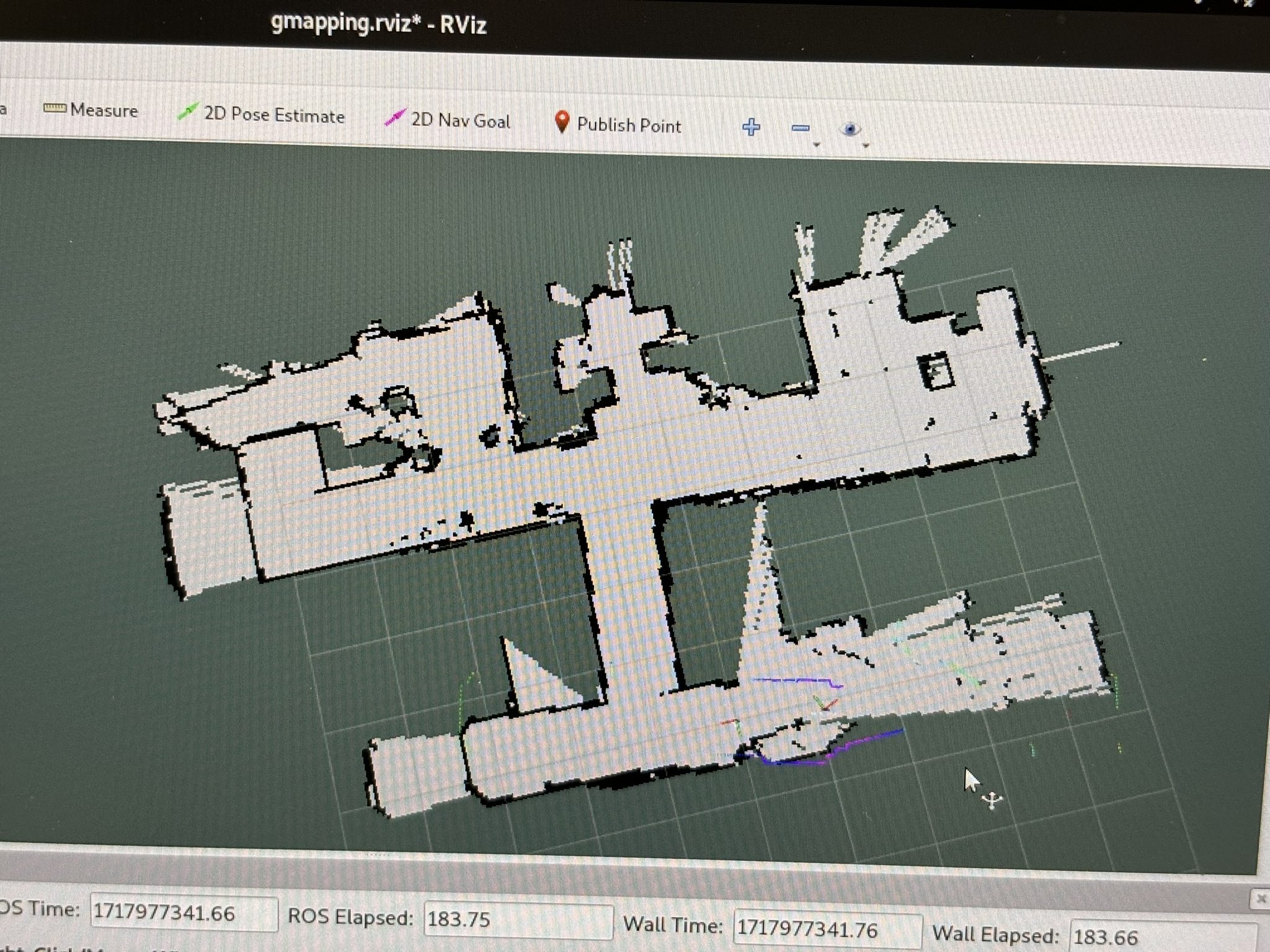

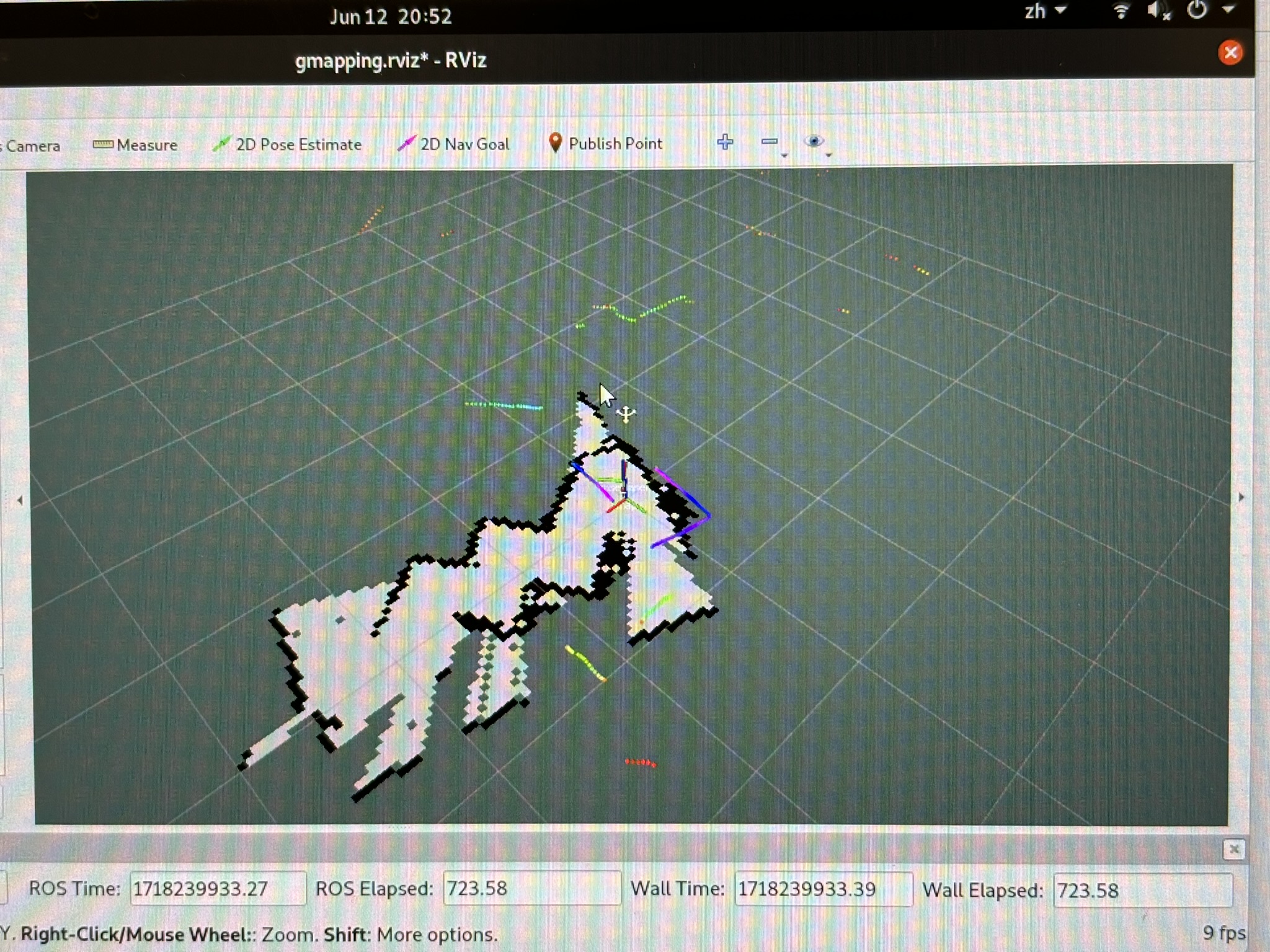

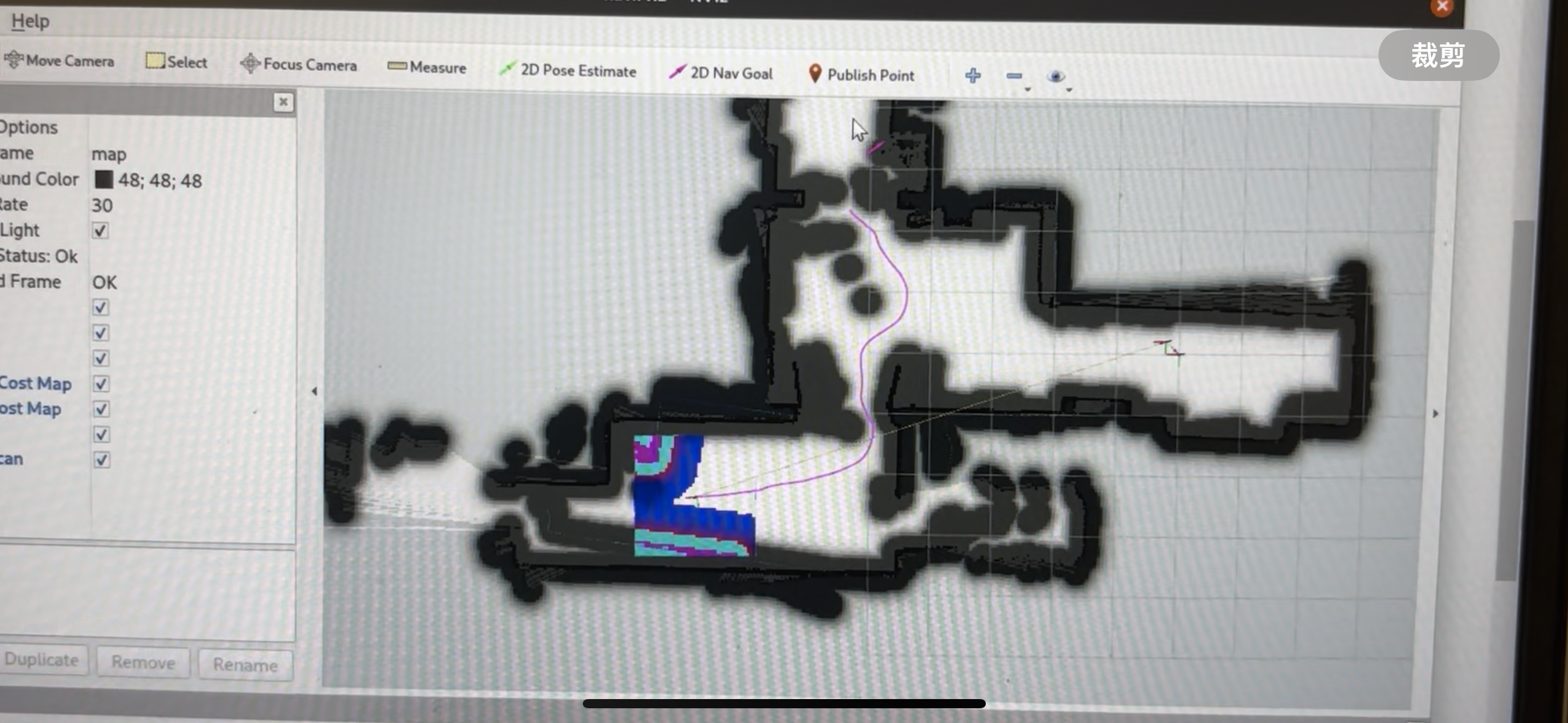

V. SLAM and My Apartment

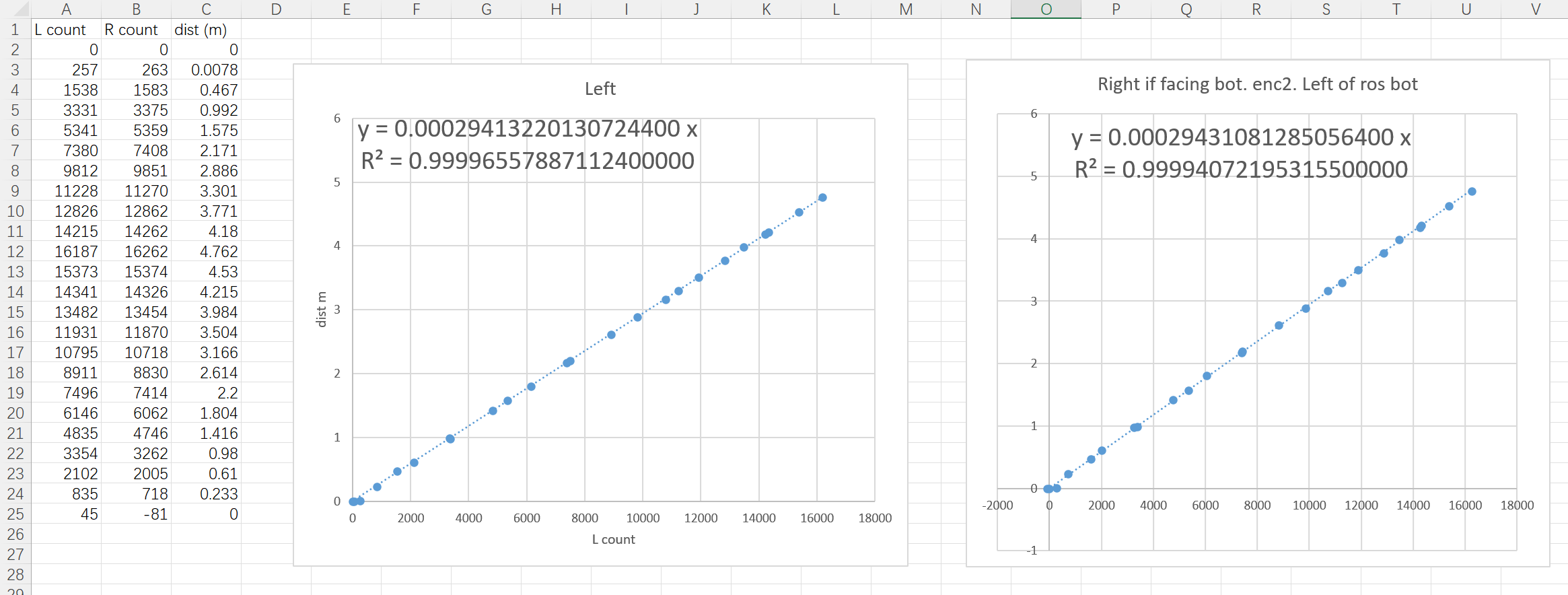

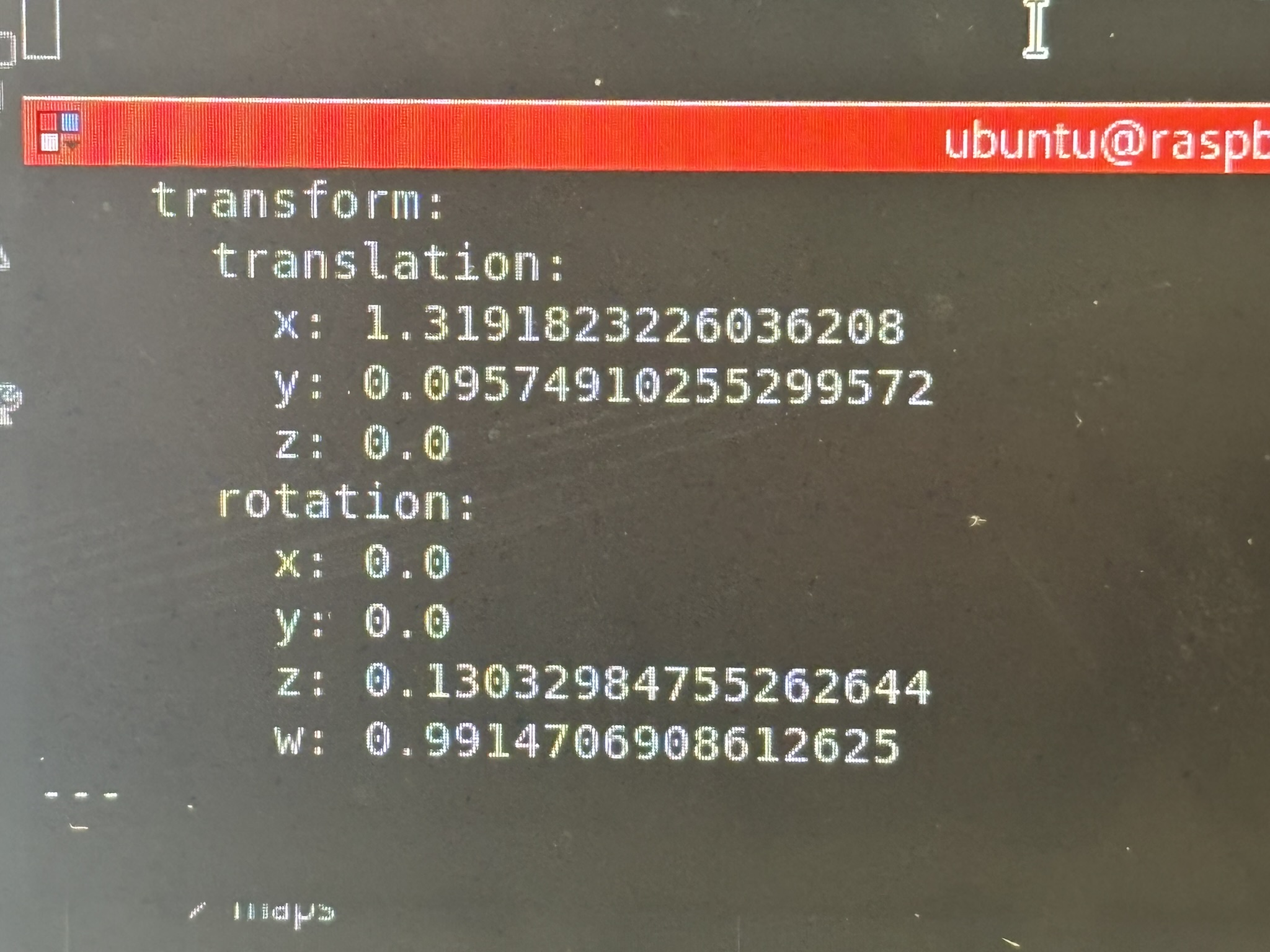

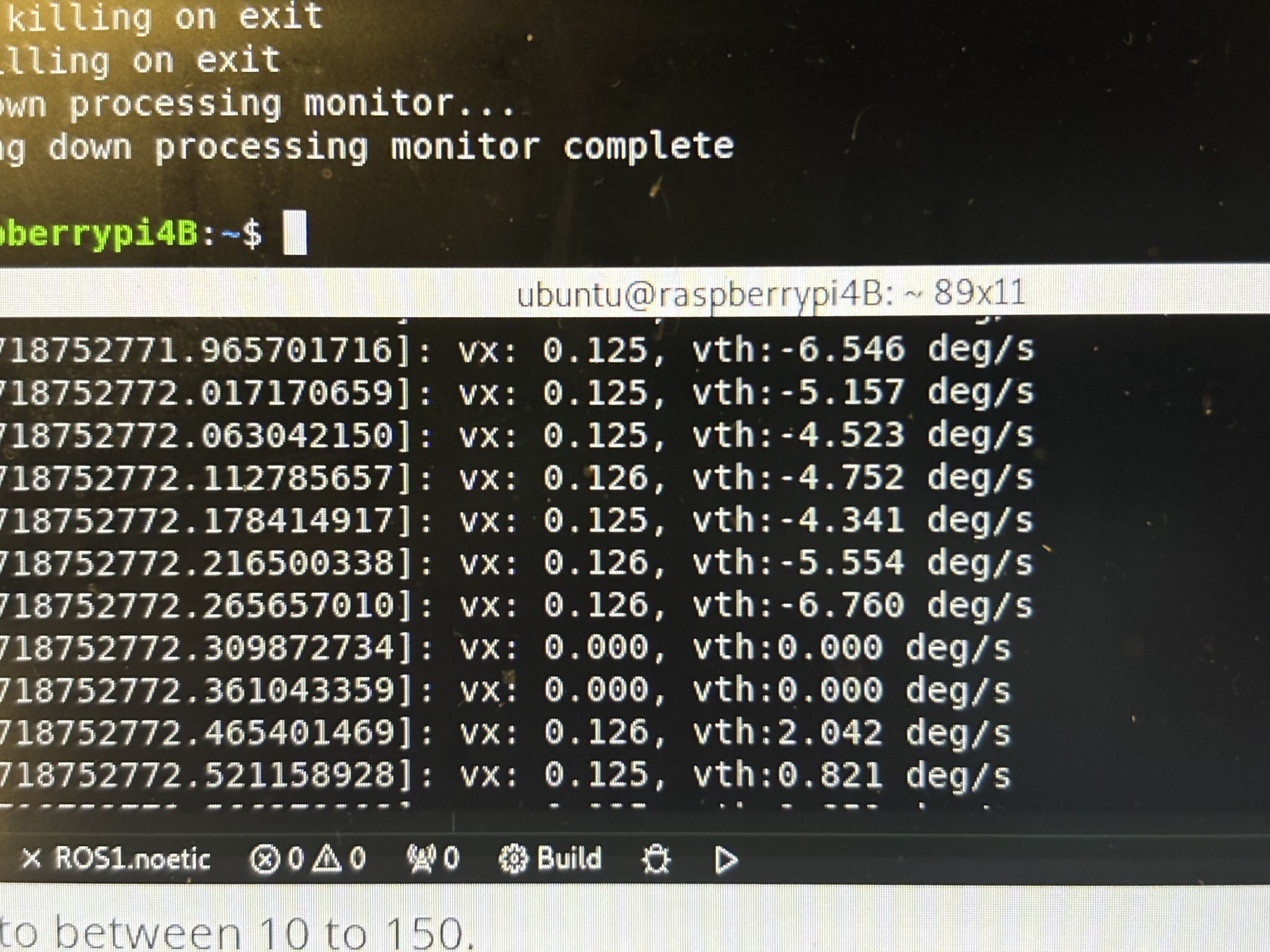

ROS has sample odometry code, so I modified it.
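For readers who have not seen an odometry node, the core of it is a dead-reckoning update: take the robot's measured velocities and integrate them into a pose. The sketch below is not my modified code and leaves out all ROS publishing; it only shows the math, assuming body-frame velocities `vx`, `vy`, `vth` are already available (for example from wheel encoders). The names `Pose2D` and `integrate_velocity` are made up for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0      # metres, in the odom frame
    y: float = 0.0      # metres, in the odom frame
    theta: float = 0.0  # heading in radians


def integrate_velocity(pose, vx, vy, vth, dt):
    """Advance the pose by one time step of length dt (seconds).

    vx, vy are linear velocities in the robot's own frame (m/s),
    vth is the angular velocity (rad/s).
    """
    # Rotate the body-frame velocity into the odom frame, then integrate.
    dx = (vx * math.cos(pose.theta) - vy * math.sin(pose.theta)) * dt
    dy = (vx * math.sin(pose.theta) + vy * math.cos(pose.theta)) * dt
    theta = pose.theta + vth * dt
    # Keep the heading wrapped to (-pi, pi].
    theta = math.atan2(math.sin(theta), math.cos(theta))
    return Pose2D(pose.x + dx, pose.y + dy, theta)


if __name__ == "__main__":
    pose = Pose2D()
    # Drive forward at 0.2 m/s while turning at 0.5 rad/s, 10 Hz for 2 seconds.
    for _ in range(20):
        pose = integrate_velocity(pose, 0.2, 0.0, 0.5, 0.1)
    print(pose)
```

Small errors in `vx` and `vth` accumulate with every step, which is one reason odometry alone drifts and a SLAM package has to correct it with laser scans.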

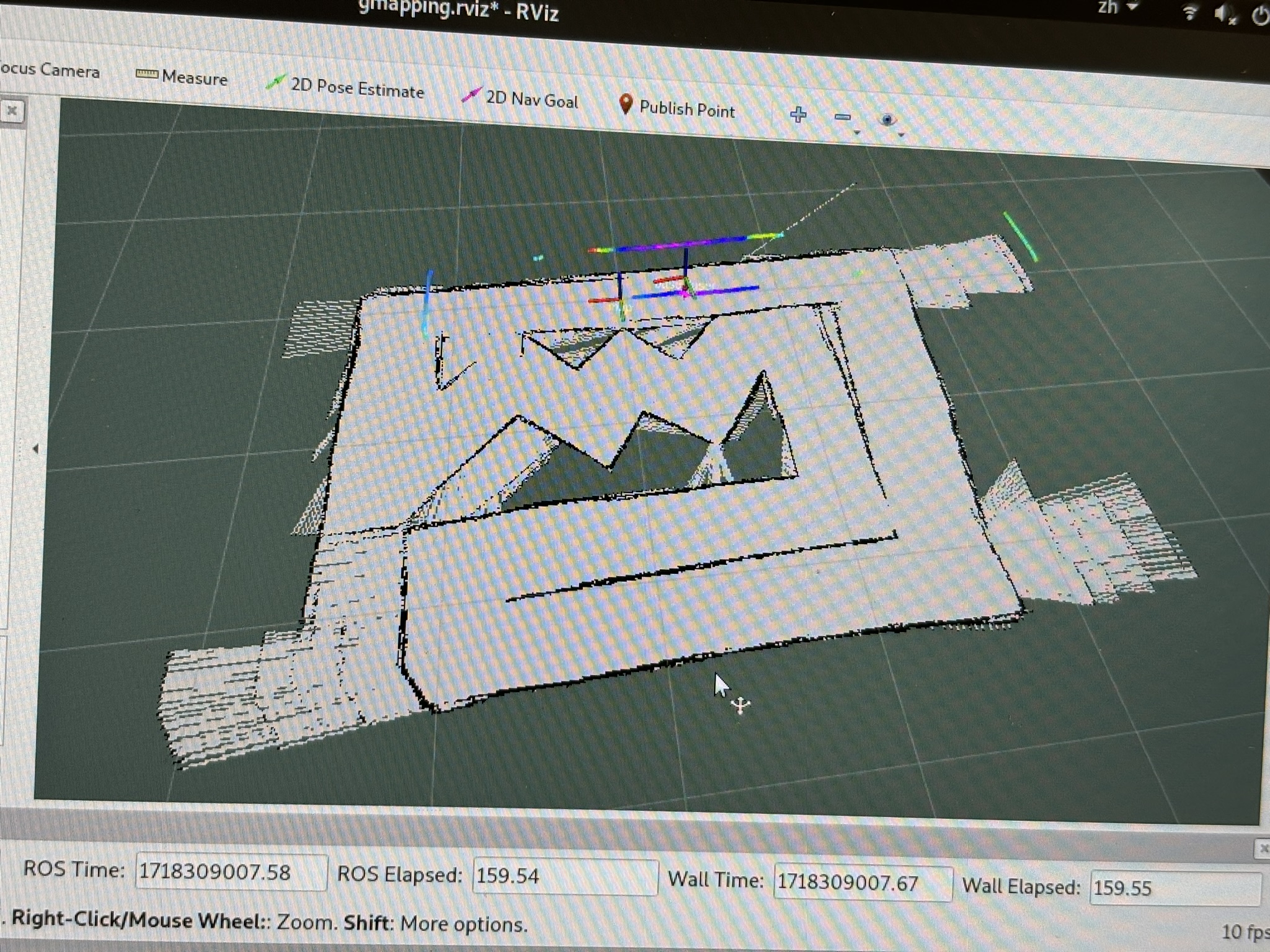

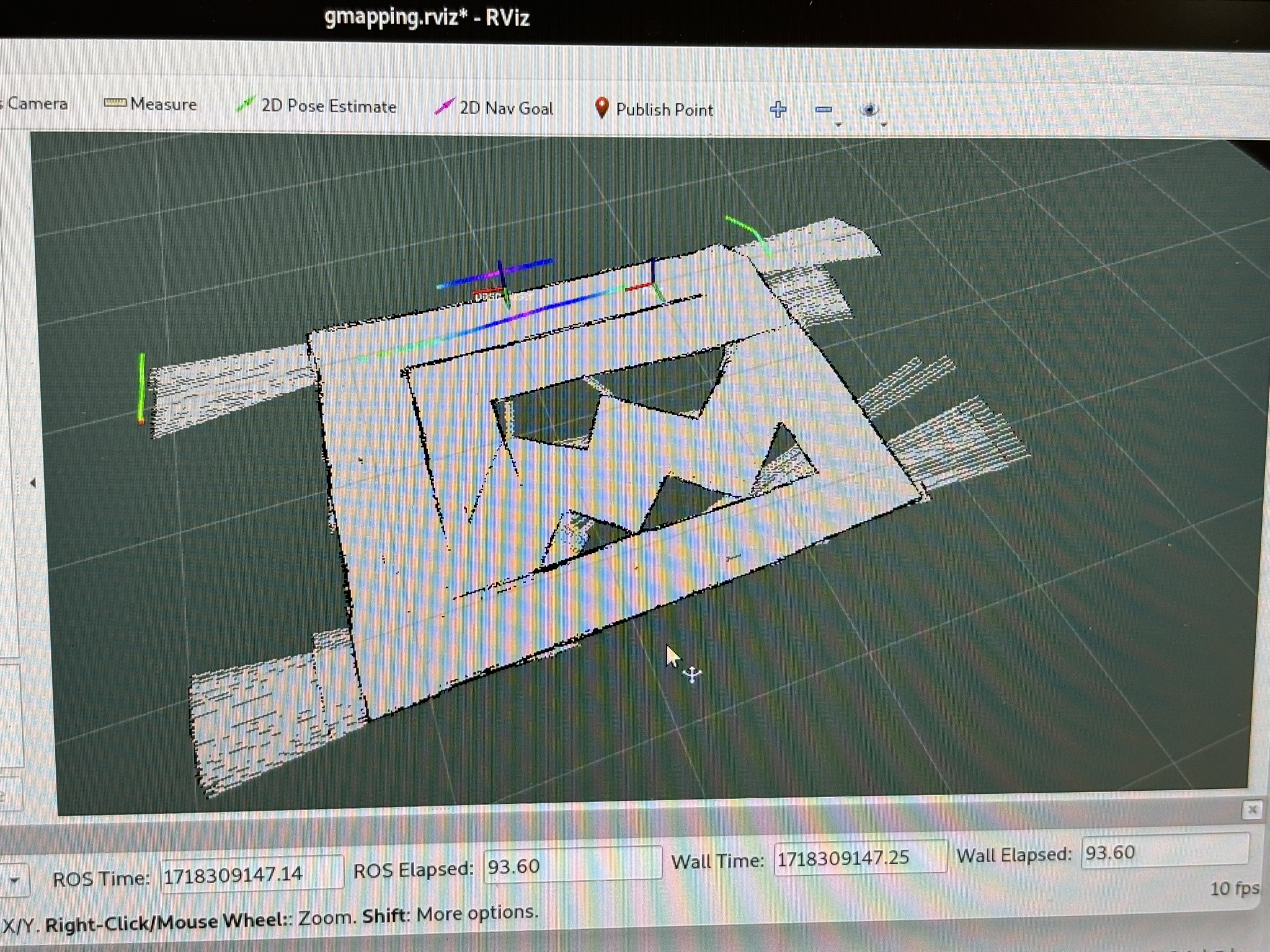

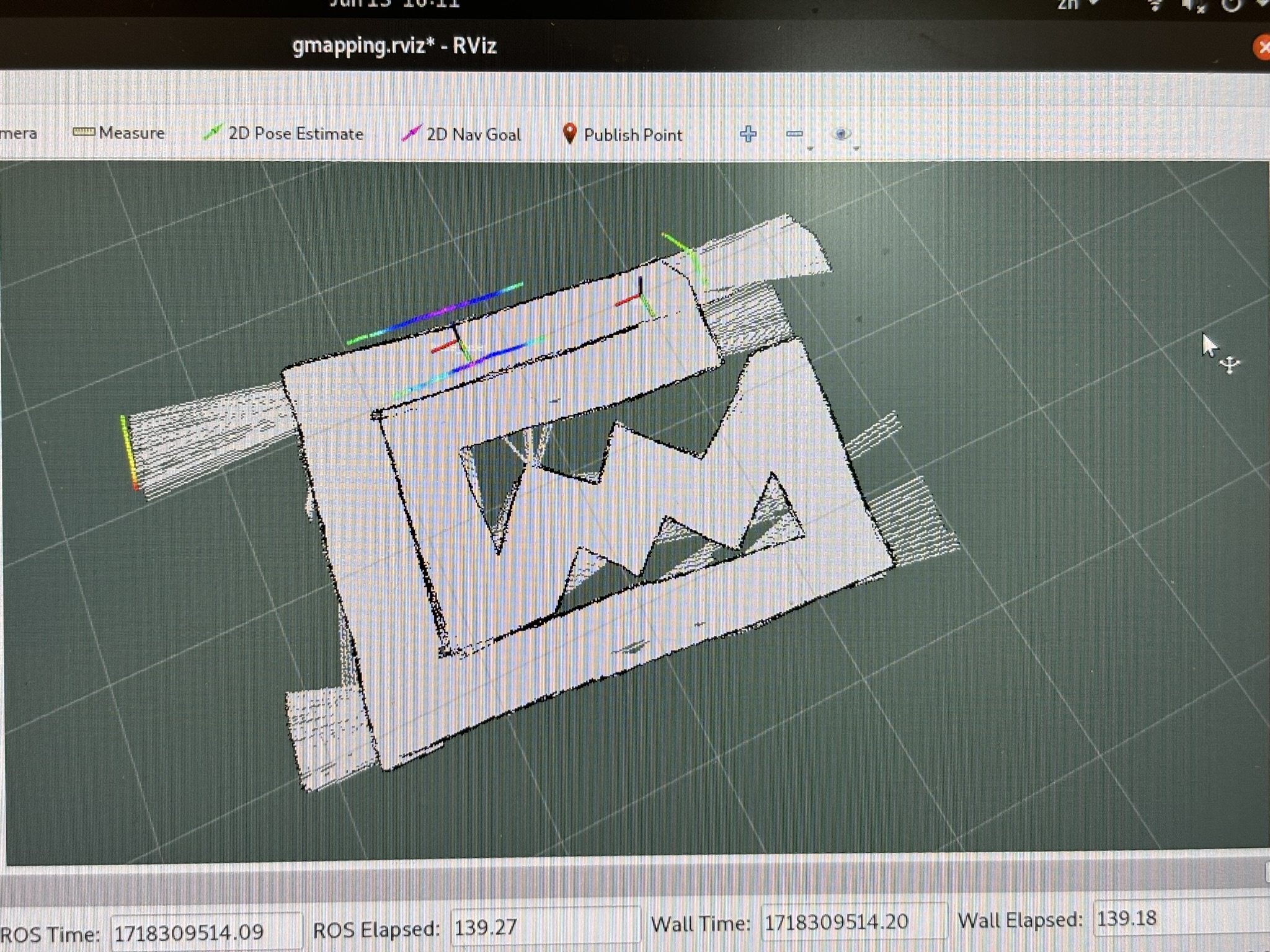

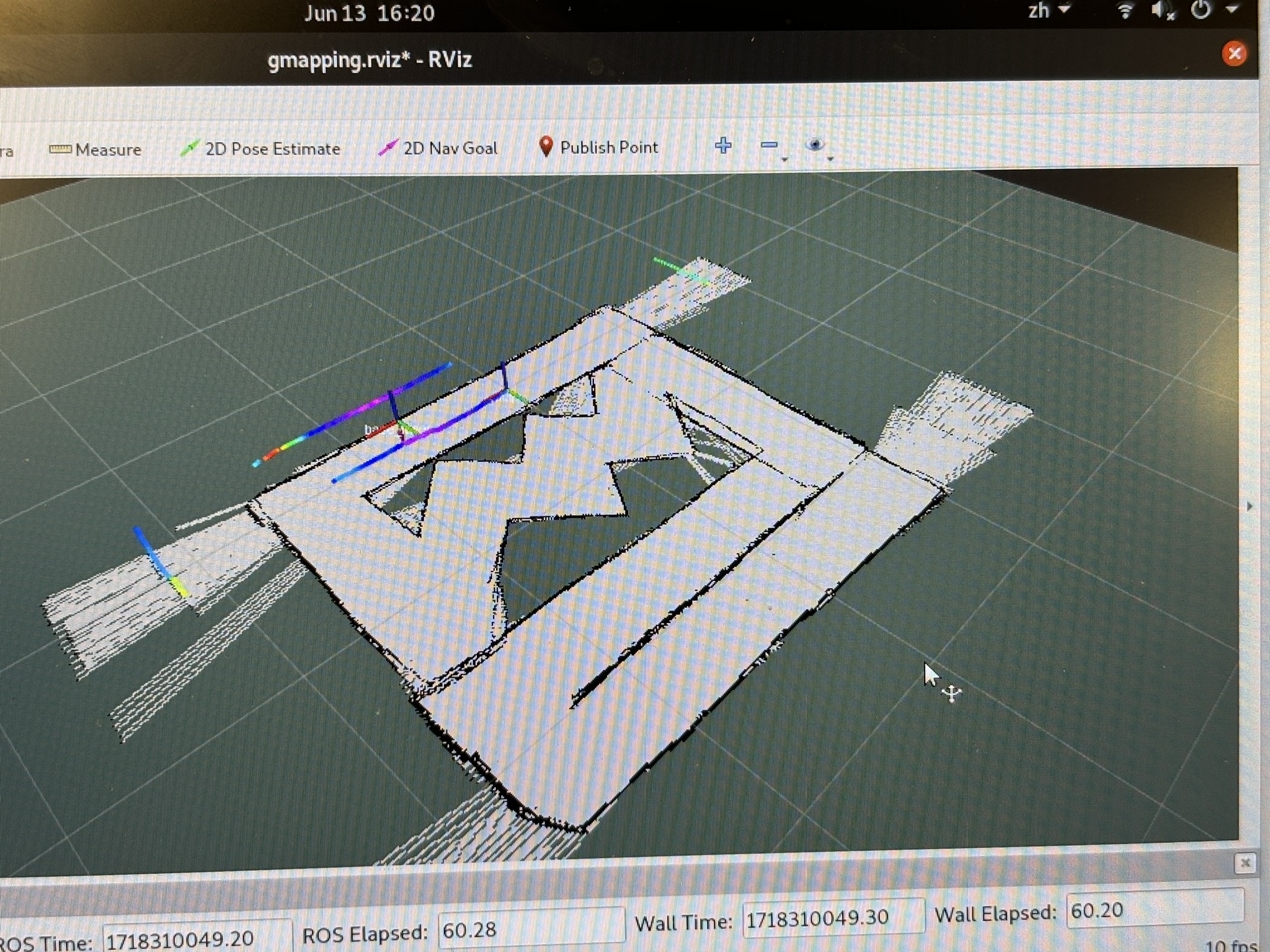

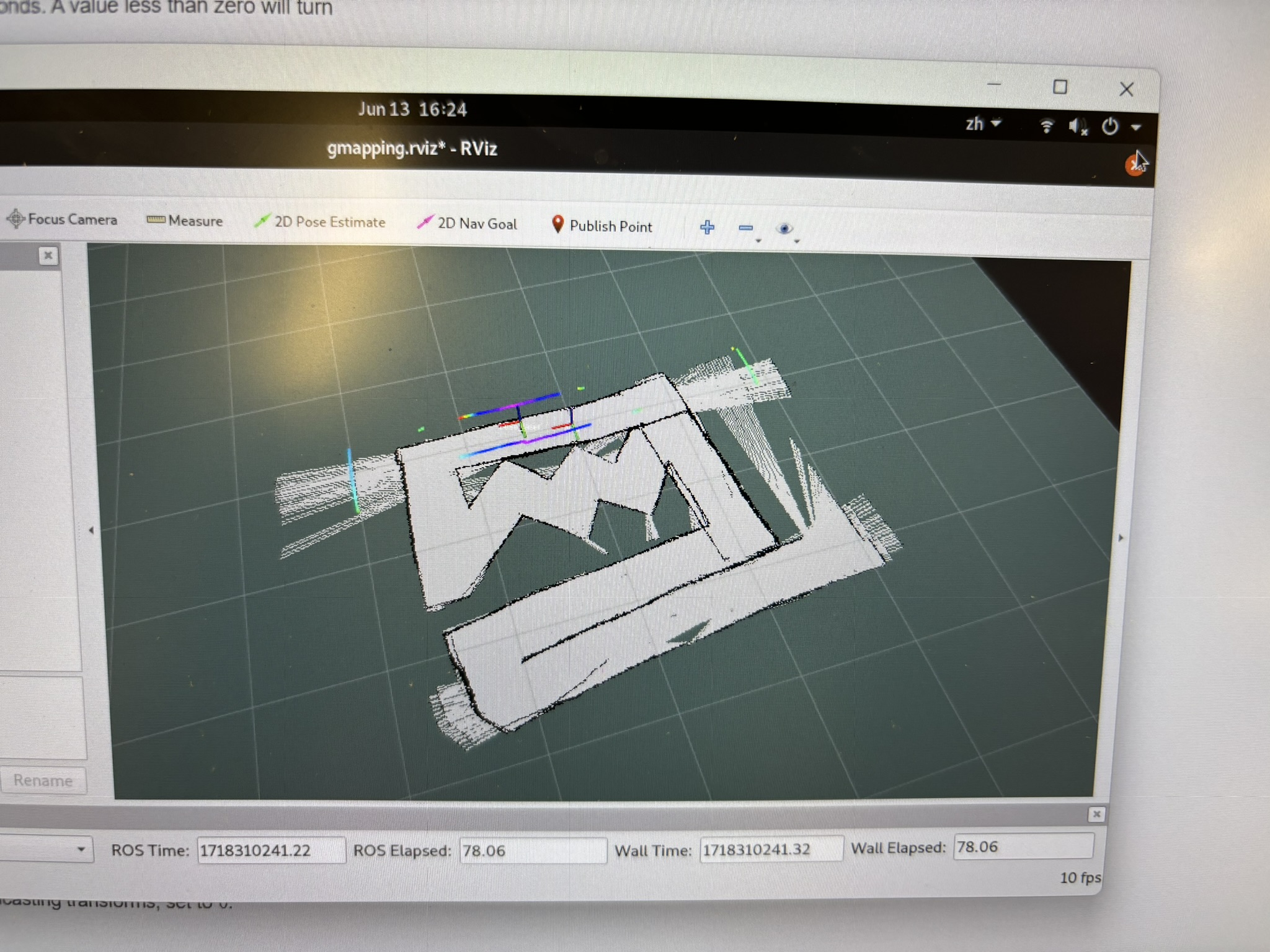

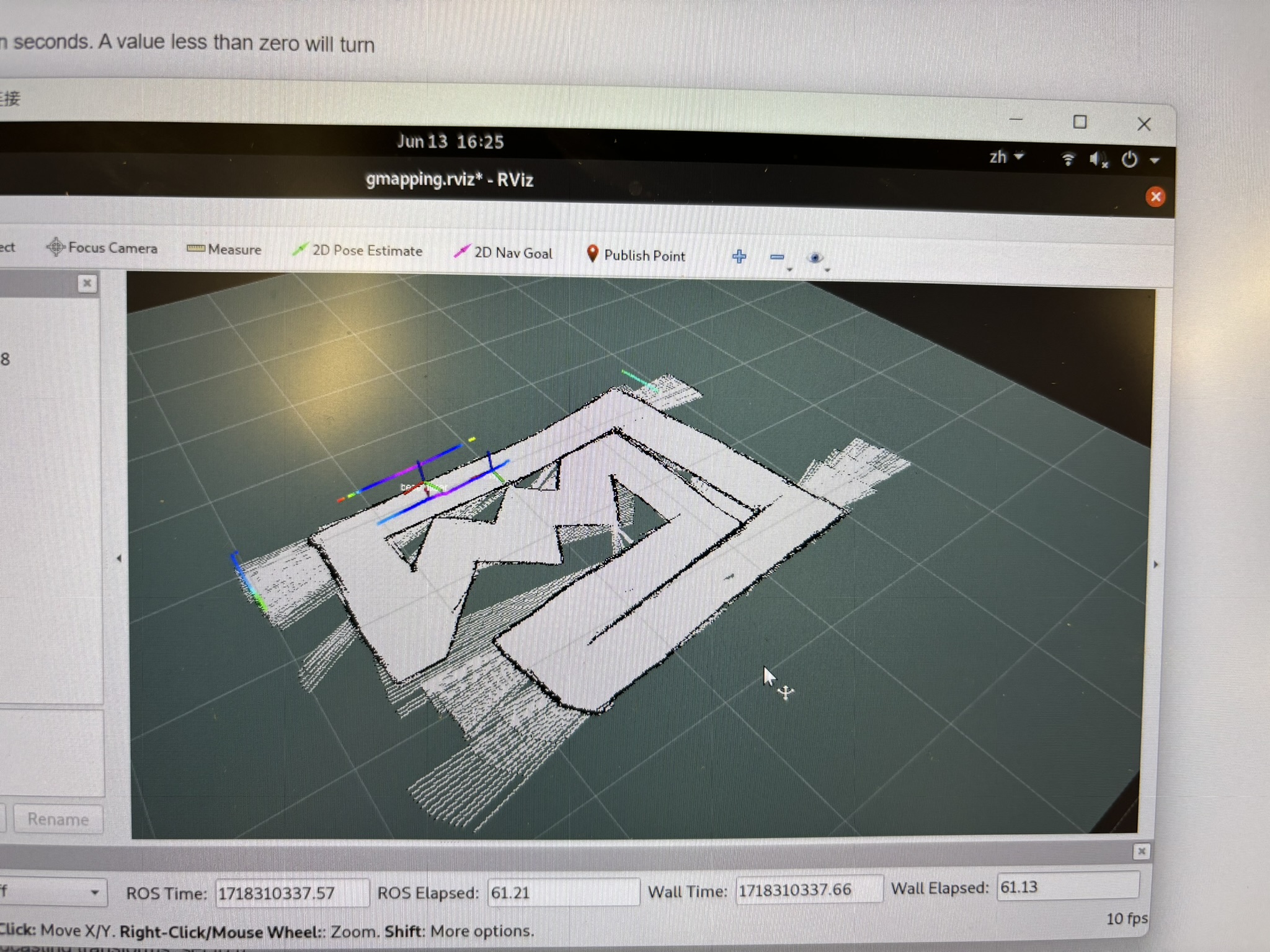

VI. SLAM and the Racing Track

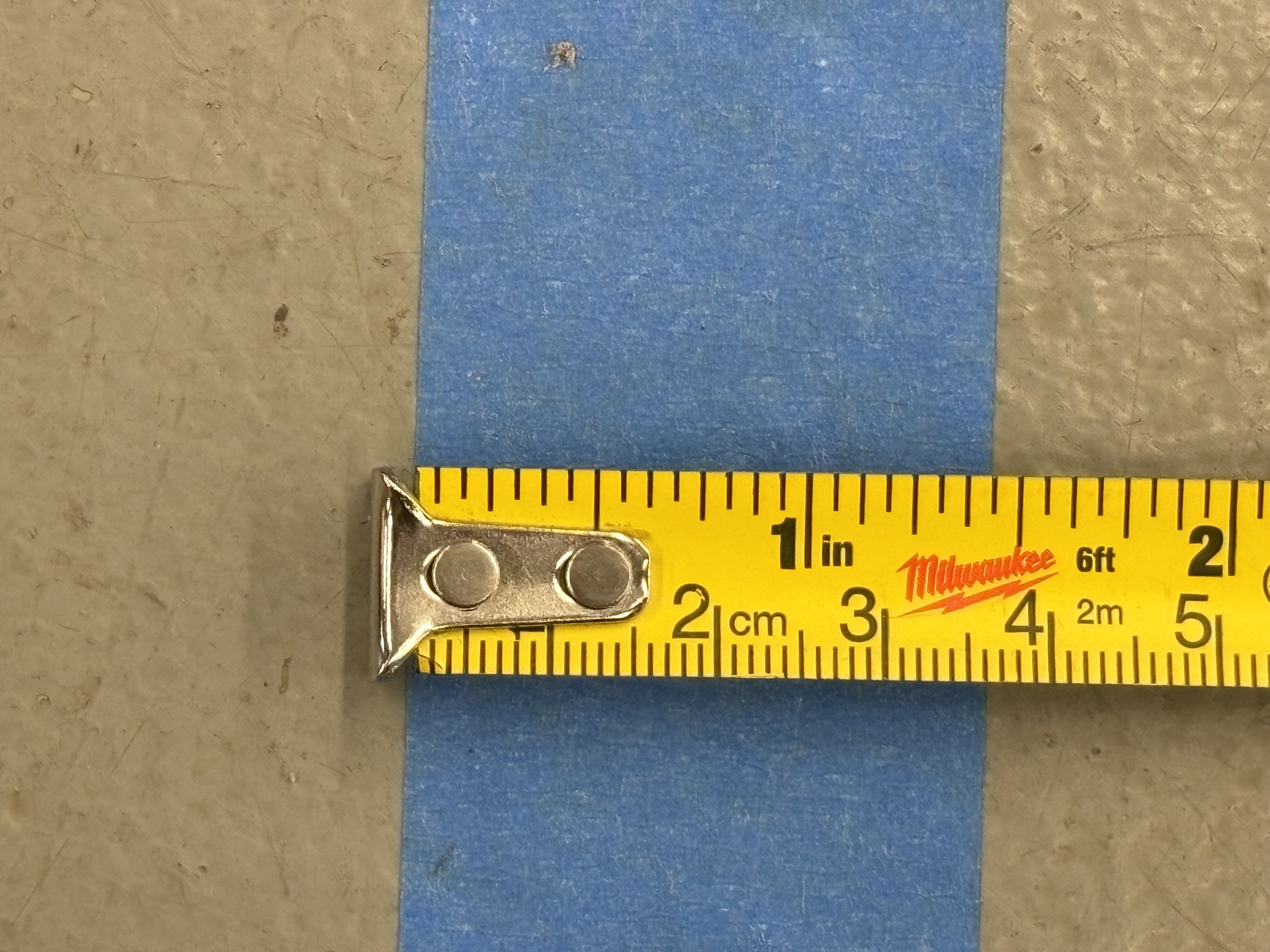

I built the same racing track that I raced on in ME444.

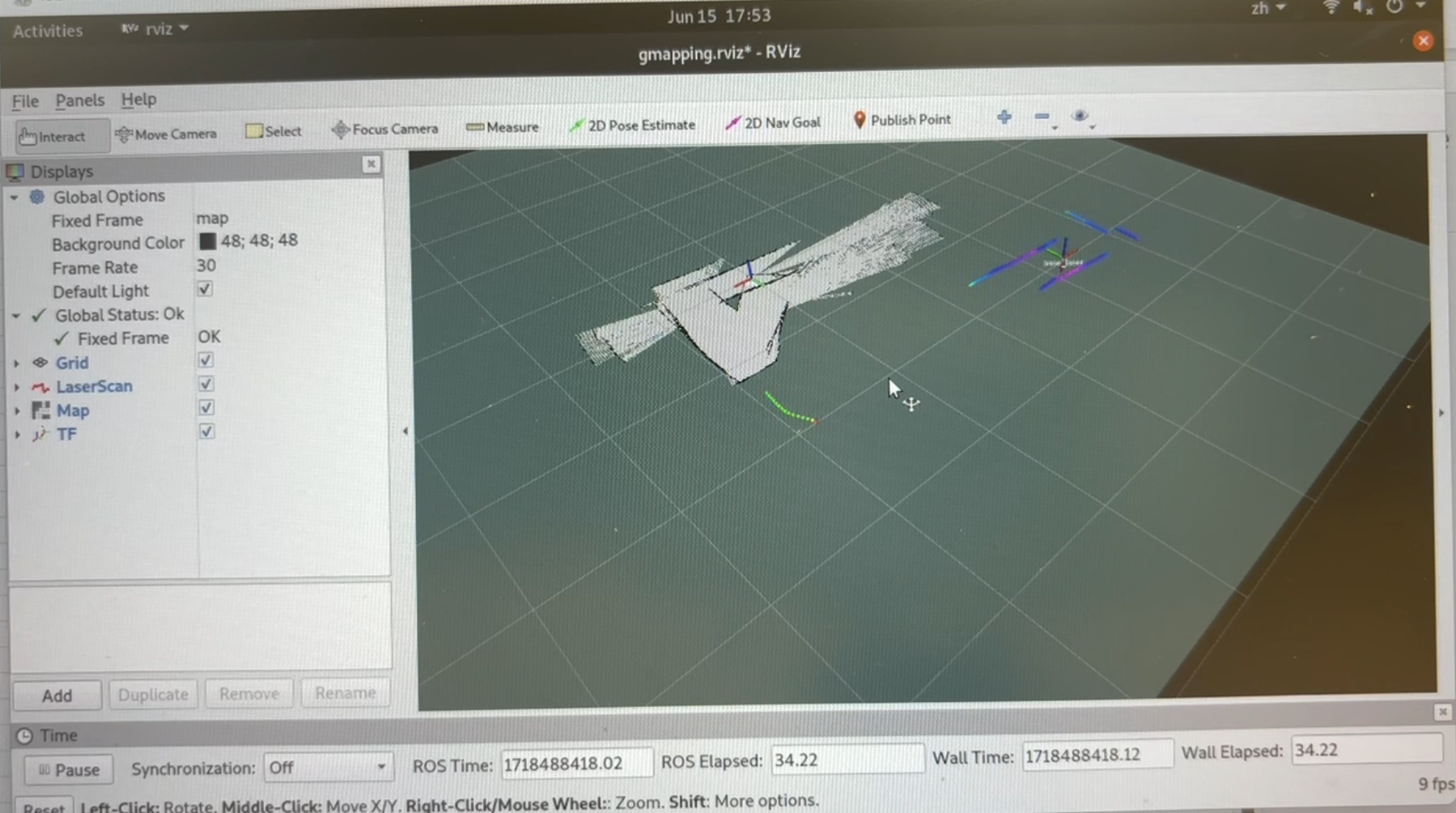

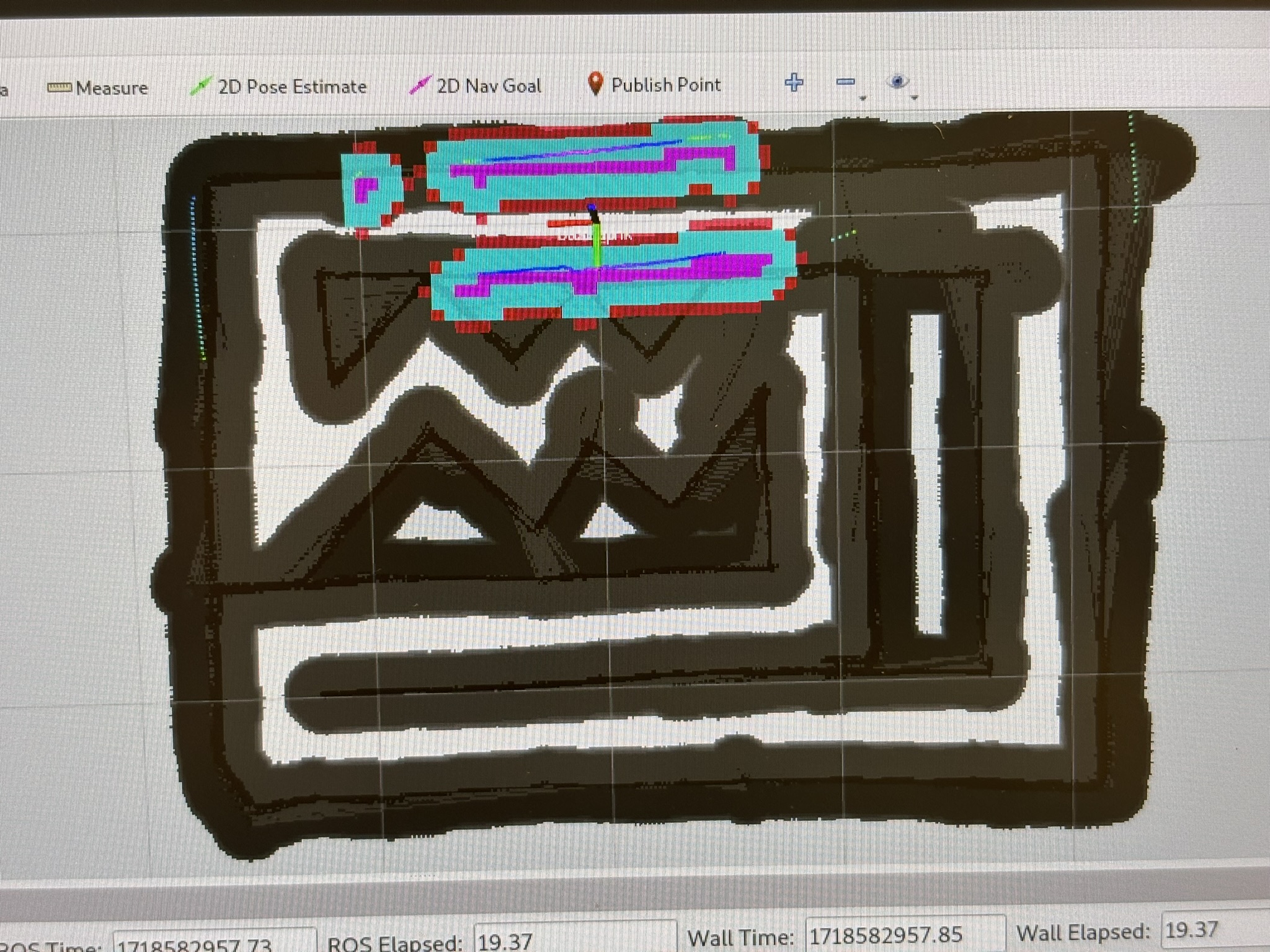

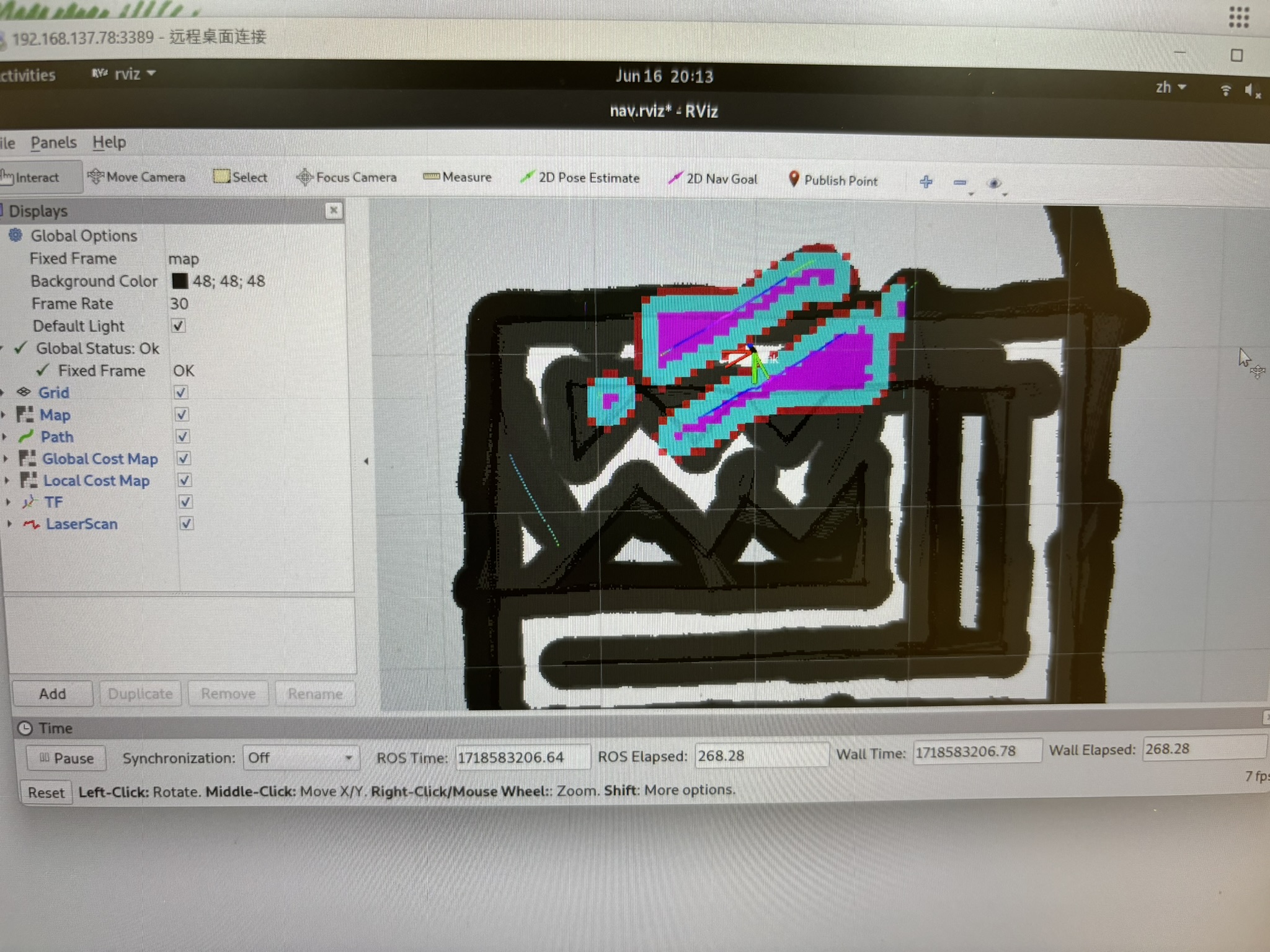

Gmapping doesn’t trust the odometry, so the map can’t be generated correctly.

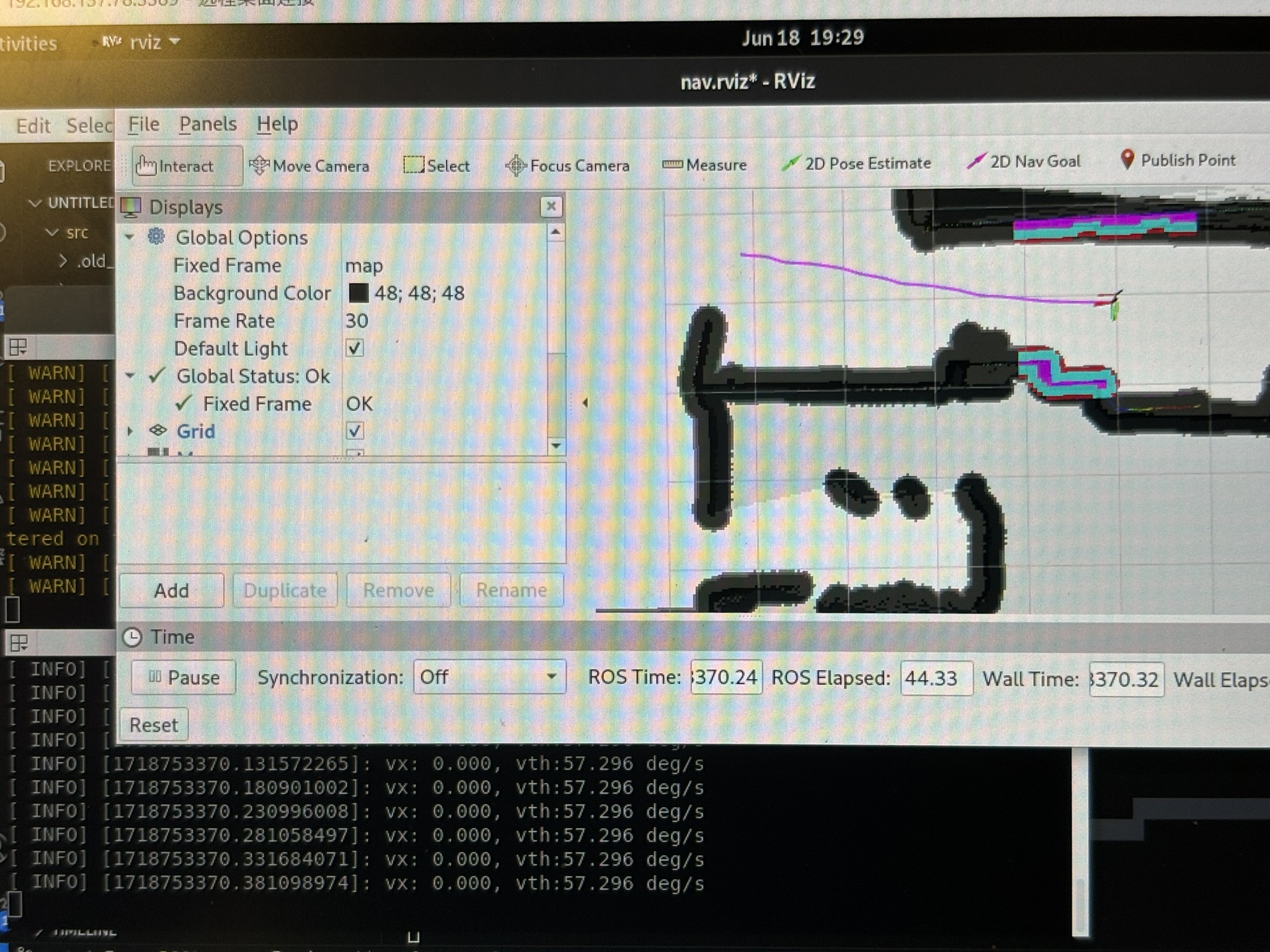

VII. Navigation

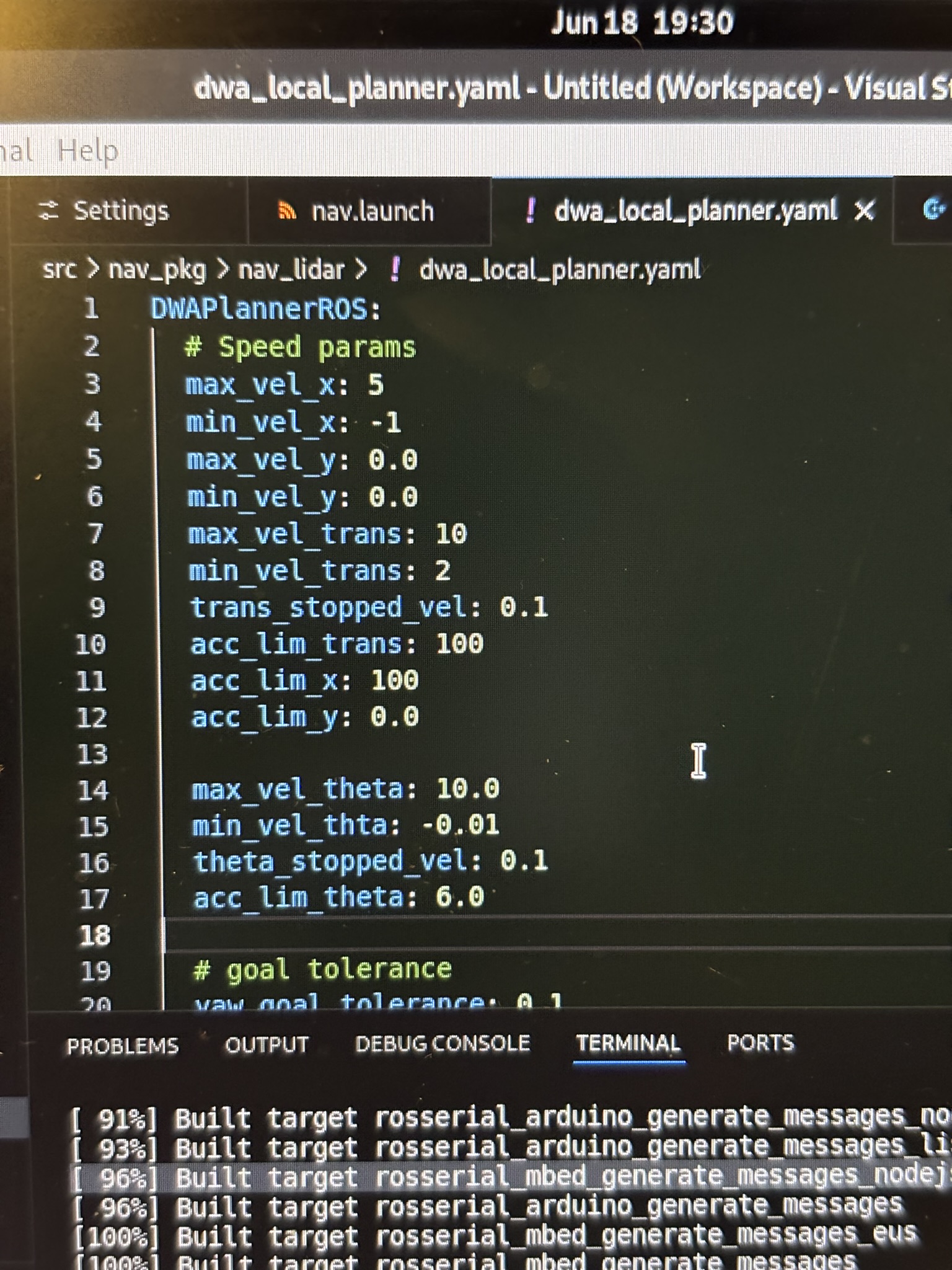

I had to increase the inflation radius and the robot radius by a lot, so DWA wouldn’t smash the bot into the wall. DWA had zero successful runs.
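To see why a bigger inflation radius and robot radius keep the planner away from walls, it helps to look at how the costmap assigns cost around an obstacle. The sketch below is modeled on the exponential decay described in the costmap_2d inflation layer documentation, as far as I understand it; it is not code from my setup, the function name `inflation_cost` is made up, and the numbers in the example are arbitrary.

```python
import math

LETHAL_OBSTACLE = 254              # cell contains an obstacle
INSCRIBED_INFLATED_OBSTACLE = 253  # robot centre here means a certain collision
FREE_SPACE = 0


def inflation_cost(distance, inscribed_radius, inflation_radius, cost_scaling_factor):
    """Cost of a cell that is `distance` metres from the nearest obstacle."""
    if distance <= 0.0:
        return LETHAL_OBSTACLE
    if distance <= inscribed_radius:
        return INSCRIBED_INFLATED_OBSTACLE
    if distance > inflation_radius:
        return FREE_SPACE
    # Between the two radii the cost decays exponentially with distance.
    factor = math.exp(-cost_scaling_factor * (distance - inscribed_radius))
    return int((INSCRIBED_INFLATED_OBSTACLE - 1) * factor)


if __name__ == "__main__":
    # Arbitrary example: 0.2 m robot radius, 0.5 m inflation radius.
    for d in (0.0, 0.1, 0.3, 0.45, 1.0):
        print(d, inflation_cost(d, 0.2, 0.5, 10.0))
```

A larger robot radius widens the band where the cost is 253, and a larger inflation radius (or a smaller scaling factor) spreads the non-zero cost further from the wall, so paths that hug the wall become more expensive.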

VIII. Conclusion

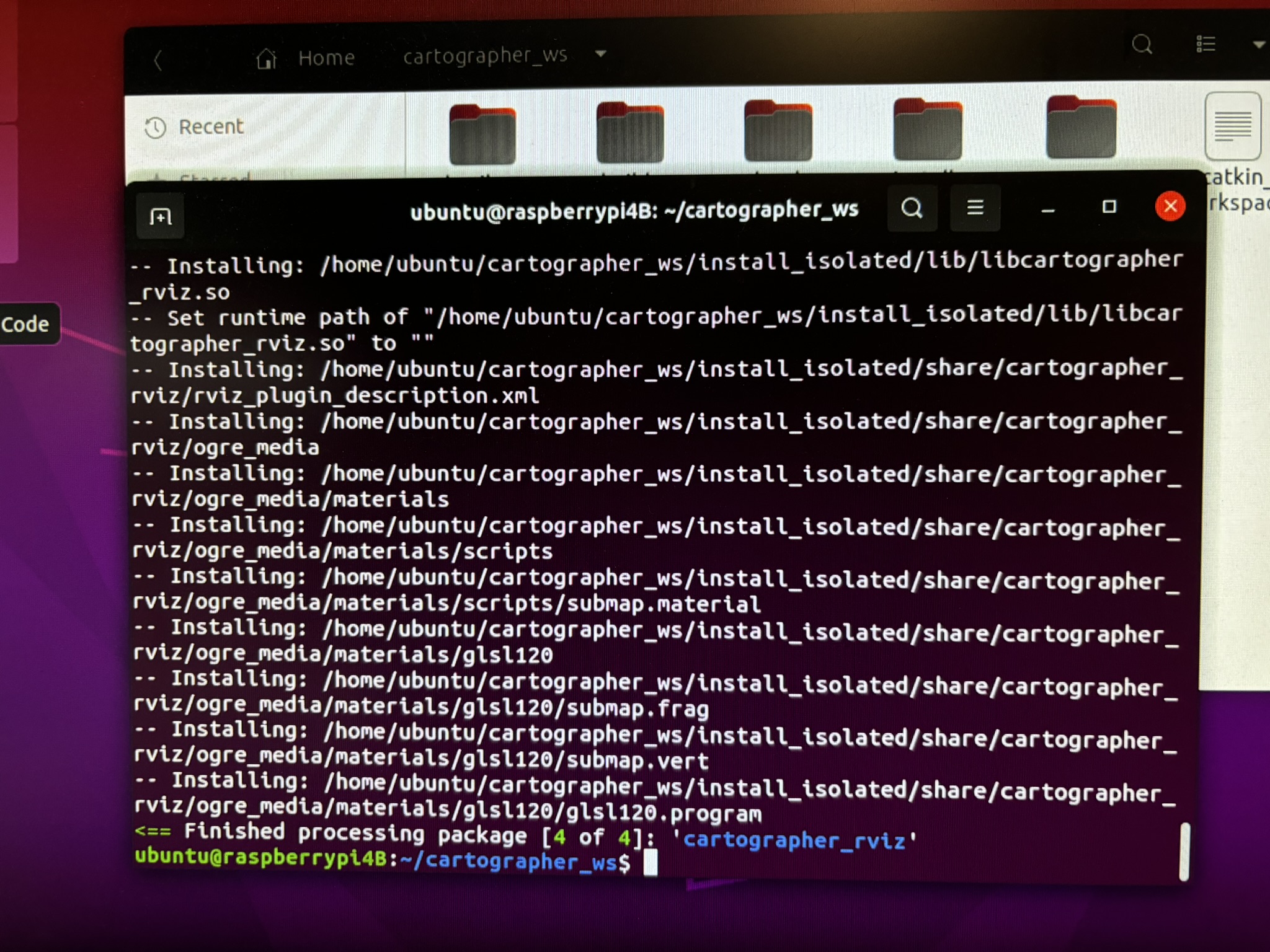

This project deepened my impression of open source: using open source is just a pain. The code keeps failing, and I guarantee that more than half of the code is related to error reporting. If their code really worked well, they wouldn’t have put that many error messages in there. Tutorials are outdated, key questions are never answered, and the developers only respond “here is the doc, go read it yourself” and close the post. I’m so sure that the Cartographer developers couldn’t install Cartographer successfully on their first try.

Using open source generates endless problems, one after another. What does that mean? See the code below:

    void try_to_solve_the_problem(Problem problem) { // void, because it never returns
        if (find_a_solution(problem)) {
            Problem new_problem = generate_a_new_problem();
            try_to_solve_the_problem(new_problem);
        }
    }

I don’t know whether it’s a hardware problem or a software problem, but everything is very laggy when running on a 4 GB Raspberry Pi 4B. I really suspect that ROS was not designed for this board. The Raspberry Pi 4B only has a 4-core CPU whose clock idles at only 600 MHz (it is rated for 1.5 GHz under load), the same as a Teensy 4.0 without overclocking. It’s too slow.
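As a quick check on the clock speed, the kernel exposes the current, minimum and maximum CPU frequency through sysfs. This is a minimal sketch, assuming the standard Linux cpufreq directory for core 0 exists on this Ubuntu image; the helper names `read_khz` and `describe_clock` are made up for illustration.

```python
from pathlib import Path

# Assumption: standard Linux cpufreq sysfs directory for core 0.
CPUFREQ_DIR = Path("/sys/devices/system/cpu/cpu0/cpufreq")


def read_khz(name, base=CPUFREQ_DIR):
    """Read one cpufreq file (values are in kHz); return None if unavailable."""
    try:
        return int((Path(base) / name).read_text().strip())
    except (OSError, ValueError):
        return None


def describe_clock(base=CPUFREQ_DIR):
    """Return a one-line summary of the current, minimum and maximum CPU clock."""
    parts = []
    for label, name in (("current", "scaling_cur_freq"),
                        ("min", "cpuinfo_min_freq"),
                        ("max", "cpuinfo_max_freq")):
        khz = read_khz(name, base)
        value = "unknown" if khz is None else f"{khz / 1000:.0f} MHz"
        parts.append(f"{label}: {value}")
    return ", ".join(parts)


if __name__ == "__main__":
    print(describe_clock())
```

If the current value stays near the minimum while ROS is busy, the CPU governor or thermal throttling may be worth a look before blaming the board itself.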

Pain.